In this article I report what I’ve learned so far about the possibility of using steerable consumer home monitoring cameras (“nanny cams”) to allow students to work together on laboratory exercises, with one student in the room and one (or more?) participating using the video and audio from the camera. (~4660 words)

Introduction and Motivation

The COVID-19 pandemic caused all instruction at SUNY New Paltz, including labs, to move online in March 2020. For introductory physics labs, faculty recorded videos showing the apparatus and the process of collecting data. Students then analyzed the data and wrote lab reports. This approach lost several benefits of student lab work, including real-time interaction with the equipment and real-time interaction with other students.

Lab students work together as “lab partners” for a number of reasons. One is that equipment can be limited, especially if it is expensive. Another is that some activities require more than one set of hands. But even when these considerations don’t apply, it has long been recognized that working with a lab partner is a valuable part of lab. Getting an experiment to work requires problem solving and troubleshooting,[1] and collaboration makes this easier and more instructive. Collaborating with a lab partner can be a form of peer instruction, which is well established as a useful tool for teaching physics in both labs and lecture classes.[2]

If we are able to have students in the classroom in Fall 2020, they will likely be required to wear masks and to spread out in the classroom to preserve “social distancing.” The masks won’t be a problem, but being “spread out” directly conflicts with working together as lab partners. One way to have students work together while preserving distancing would be to have one student working in the classroom and another connected via video chat. A problem with using a standard video chat application is that the built-in camera on our laptop computers faces only the front of the computer, so it does not easily give a view of the equipment. We could add a separate web camera, which the person in the room could point at the experiment. Either way, using the computer for video would provide a static view (admittedly better than nothing) and would require the student in the classroom to adjust the camera continually. A clear improvement would be for the remote student to control the camera and look around as the experiment is being performed. Unfortunately, cameras that can pan and tilt (and possibly zoom)[3] and that are compatible with common video conferencing systems such as WebEx or Zoom are expensive,[4] and we would need one for each lab station.

A note on terminology: At SUNY New Paltz[5] the word “hybrid” is applied to a course that has an in-classroom component and an online component. But this is not very specific. The hybrid courses in our department use the “flipped classroom” method, in which students view content such as recorded lectures online and then come to class for discussion and problem solving. Having some students in the classroom and others joining remotely via computer is another variation of “hybrid,” but it is different from a flipped classroom. I will use the term “local+remote” to mean specifically a synchronous class or activity in which some students are physically in the classroom and others join via computer, all at the same time.

Home Monitoring Camera

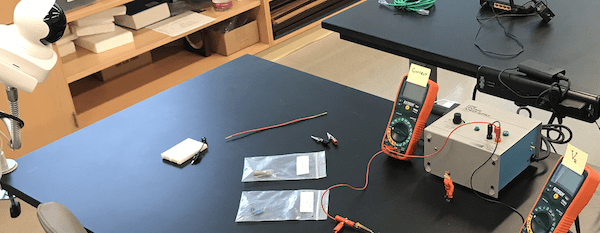

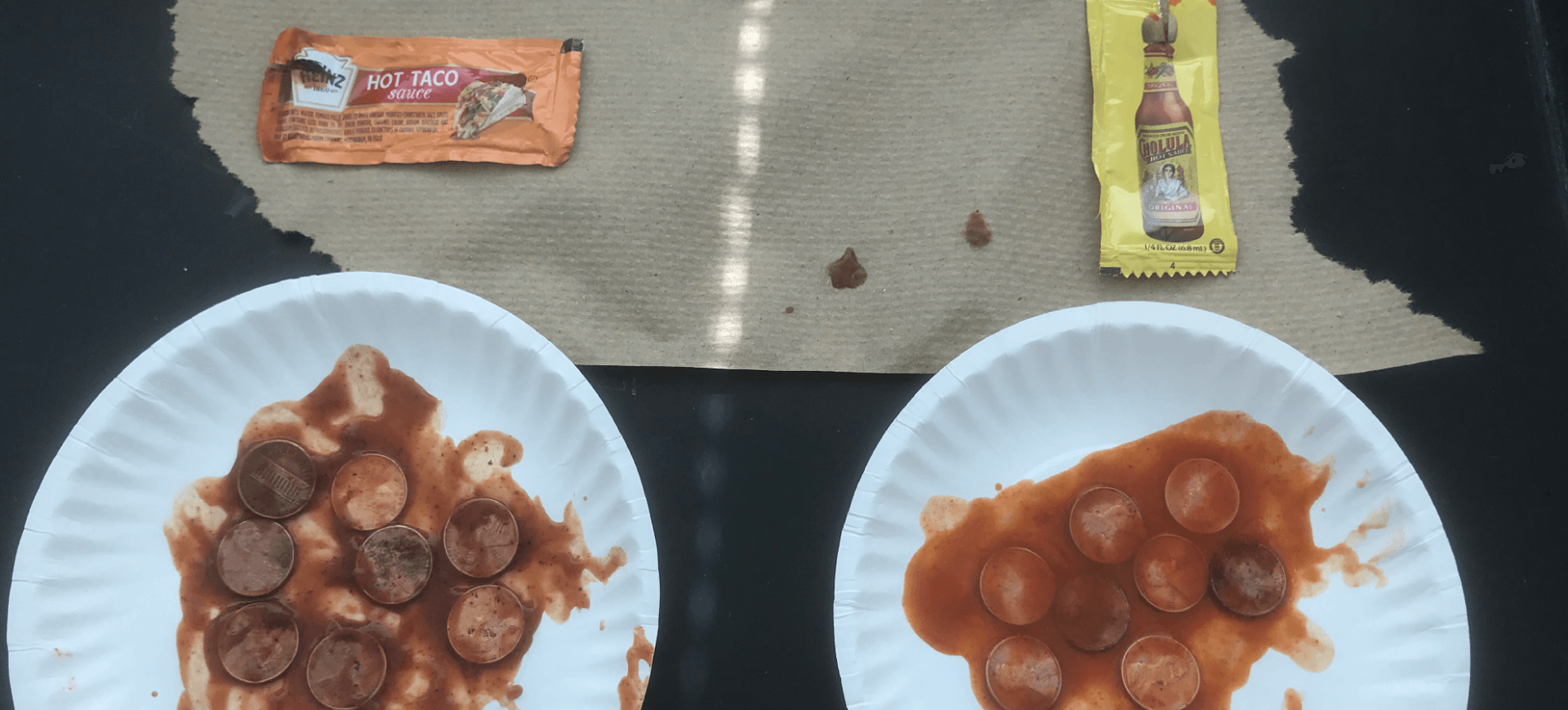

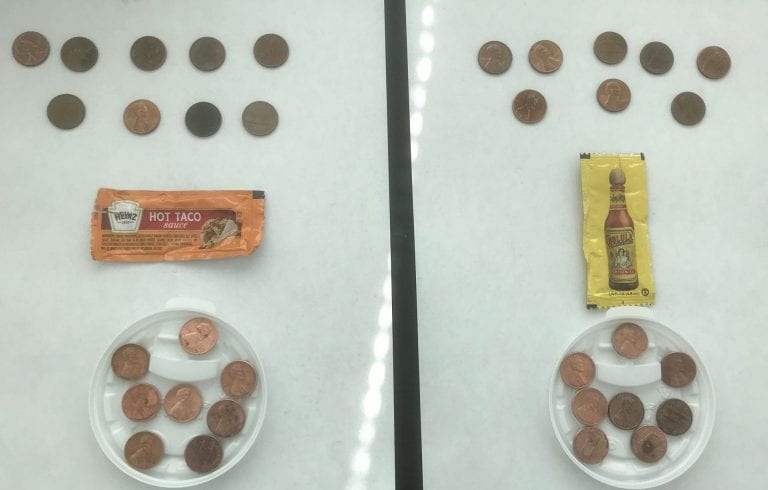

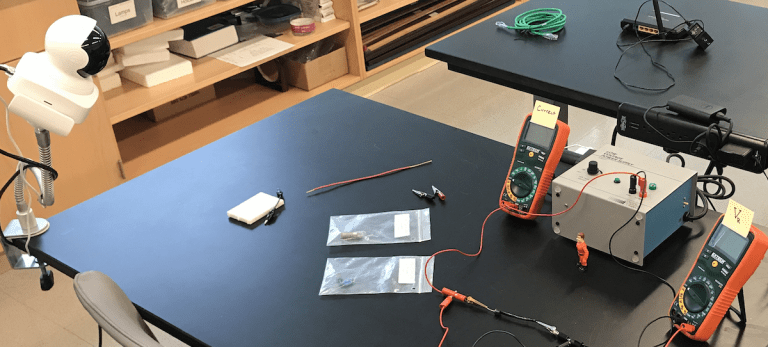

A potential alternative to an expensive steerable web camera is a commercially available home monitoring camera (aka “nanny cam”). The model I chose to test, shown in Figure 1, is the “YI-Cloud Dome Camera 1080P,” which sells for about $30 per unit.[6] I chose this camera simply because I had previous experience with an earlier static camera from the same company. My family has used that camera to check up on my elderly mother when she was home alone (before the pandemic). We called it the “Granny Cam.” Other common uses are watching pets at home or keeping an eye on a baby in another room. From my experience with the static camera, it seemed that a similar steerable camera would work well for connecting virtual lab partners. And despite what the name implies, using “the cloud” to store video is not required.

Network Configuration

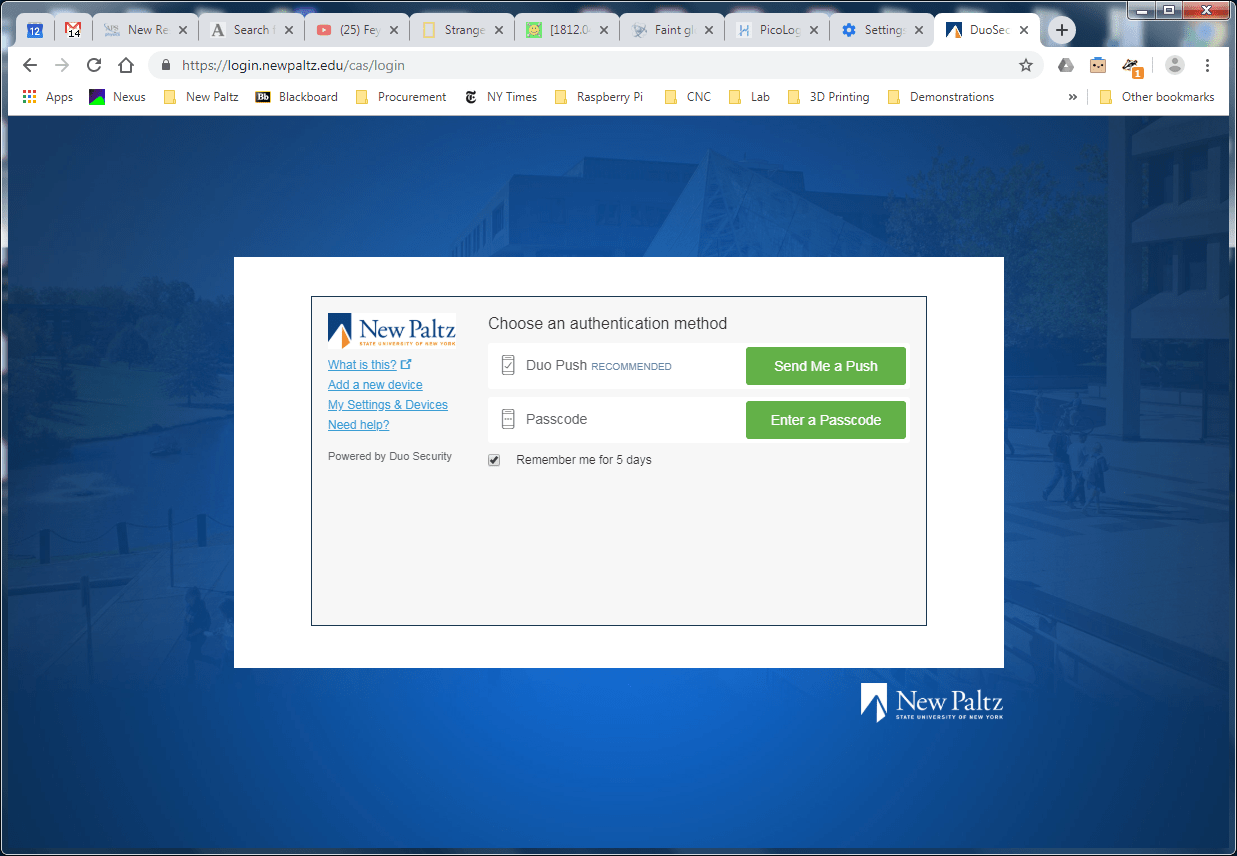

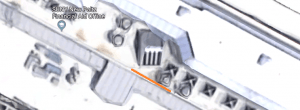

Setting up the camera is very easy in a home environment but more complex on campus, where the Wi-Fi network uses RADIUS authentication with individual usernames and passwords. The camera is designed for WEP or WPA2 authentication, which uses the network’s SSID and a single shared password, as is common for most home routers. The camera has an RJ-45 jack so that it can be connected directly to wired Ethernet, but that only worked after the camera had been paired with my mobile phone app over Wi-Fi. This presented some difficulty, because my phone connects to our campus Wi-Fi using RADIUS but the camera cannot.

One way around this difficulty is to pair the camera and mobile phone on a different WPA2 network. Once that is done, the camera can be connected to wired Ethernet. A remaining complication is that Wi-Fi won’t work if that WPA2 network is no longer available (e.g., if you paired the camera with a phone at home and then brought it into the lab). We would likely want to use wired Ethernet in any case, to avoid network interference and congestion from many of these devices in one room. (But I also found a way around this; contact me for details.)

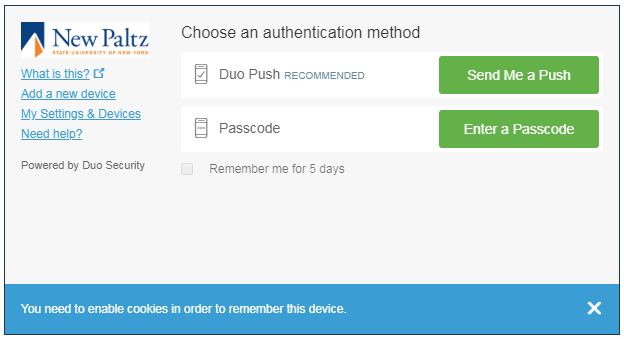

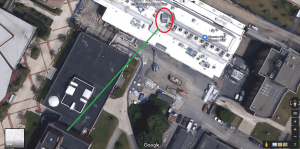

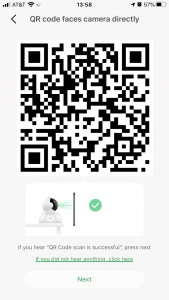

The pairing process is fairly straightforward. The camera starts in pairing mode when plugged in for the first time, or after you press and hold the reset button on the back, and it gives voice prompts to indicate where it is in the process. On iOS, at least, you have to allow the app to obtain your location (perhaps for added security?). The user enters the Wi-Fi network name (SSID) and password, which the app encodes into a QR code displayed on the screen of the mobile device (see Figure 2). That QR code is shown to the camera,[7] which reads and decodes it and uses the SSID and password to authenticate to the Wi-Fi hotspot. After only a minute or two the camera is paired to the account used in the mobile app. It can then be accessed with the manufacturer’s mobile app from any device using that same account,[8] and the camera can also be “shared with family” with someone using a different account (an important feature discussed further below).

Although you cannot pair the camera over wired Ethernet, it is possible to switch between wired Ethernet and Wi-Fi. Whenever an Ethernet cable is plugged into the device it switches to the wired connection, and when the cable is unplugged it switches to Wi-Fi (if it is able to do so). I have not measured how long these transitions take.
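The connection rules observed above can be summarized as a small decision function, which may help when planning where each camera gets paired. This is a hypothetical model of the behavior I saw, not the manufacturer's firmware logic; the names `CameraState` and `active_link` are invented for illustration, and the model leaves out the Wi-Fi workaround mentioned earlier.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class CameraState:
    """Hypothetical snapshot of one camera's network situation."""
    paired: bool                # paired with the phone app (which requires Wi-Fi)?
    ethernet_plugged: bool      # Ethernet cable connected?
    pairing_ssid_visible: bool  # WPA2 network used for pairing still in range?


def active_link(state: CameraState) -> Optional[str]:
    """Return "ethernet", "wifi", or None, following the observed behavior."""
    if not state.paired:
        # Ethernet alone is not enough: pairing must happen over Wi-Fi first.
        return None
    if state.ethernet_plugged:
        # A plugged-in cable takes priority over Wi-Fi.
        return "ethernet"
    if state.pairing_ssid_visible:
        # After unplugging, the camera falls back to the network it was paired on.
        return "wifi"
    # Paired elsewhere (e.g. at home) and no cable: the camera is unreachable.
    return None


# A camera paired at home, carried into the lab, and plugged into Ethernet:
print(active_link(CameraState(paired=True, ethernet_plugged=True, pairing_ssid_visible=False)))  # ethernet
```

The last case is the practical one for a lab: a camera paired on an off-campus network stays usable only as long as its Ethernet cable stays plugged in.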

These kinds of cameras are made to allow a homeowner to see the view from the camera when they are away from home. In my lab I simulated off-campus access by turning off Wi-Fi on my mobile phone and on an iPad and using my carrier’s mobile data network on both. Even though I was in the same room, the packets had to get to and from the camera by entering and leaving the campus network, and that worked fine. I later verified that I could view and control the camera when I was 10 miles from campus. There was a noticeable increase in latency when using the mobile network from a distance.

Gooseneck Mount

Placing the camera on the lab table did not give a good view of the equipment, which is also on the table, so it was necessary to lift the camera up to the eye height of a typical student. A traditional camera tripod proved to be too tall when placed on the table with legs fully retracted. The height would be easier to adjust with the tripod on the floor with legs extended, but the spread legs would then take up a lot of room that would otherwise be available to the student working at the table.

To position the camera at the right height and angle, I instead used an AboveTEK Heavy Duty Aluminum Gooseneck iPad Holder,[9] which clamps to the table top, has a spring clamp that holds the camera securely by its base, and can be bent to match the eye height of a student without getting too much in the way of the person working at the table (see Figures 3 and 4). I tilted the camera base downward so that the remote student could aim the view down at the table. Otherwise the camera’s downward tilt range was too limited, while its upward tilt went all the way to the ceiling (which is not useful).

We might be able to construct our own gooseneck mounts using flexible metal wiring conduit mounted on the table with bench clamps (we have plenty of those) with a custom-made 3-D printed camera mount on the end.

Audio and Controls

A mobile app is available from the manufacturer for both iOS and Android, and testing shows that the interfaces are very similar, which means that documentation and training for students would not have to be different for the two platforms.

The camera has a two-way audio feature that lets the remote observer hear audio from a microphone built into the camera and talk through speakers also built into the camera. There are two modes for the remote observer to talk. By default an on-screen button works in “push-to-talk” or “intercom” mode, meaning it has to be pressed and held while talking, which would not be the best configuration for lab partners. The settings can instead be changed to “hands-free mode,” in which the button turns the remote observer’s microphone on continuously until it is pushed again to turn it off. This would be the best way for lab partners to carry on a discussion throughout the experiment. Since hands-free mode is not the default, the remote student would have to be given instructions on how to change this setting.

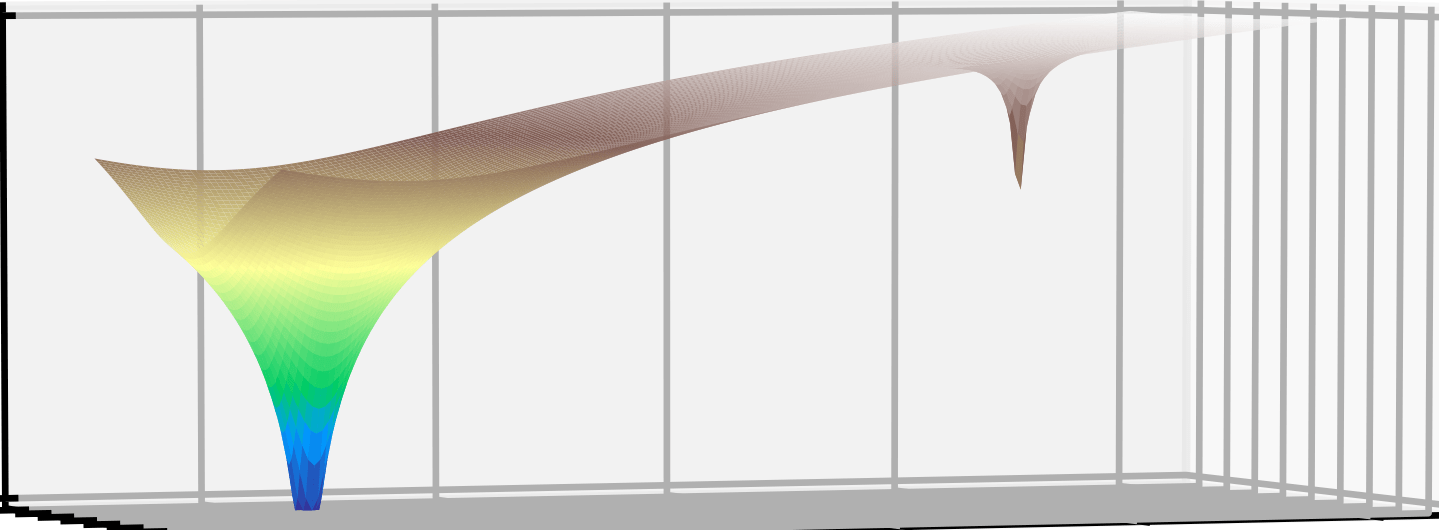

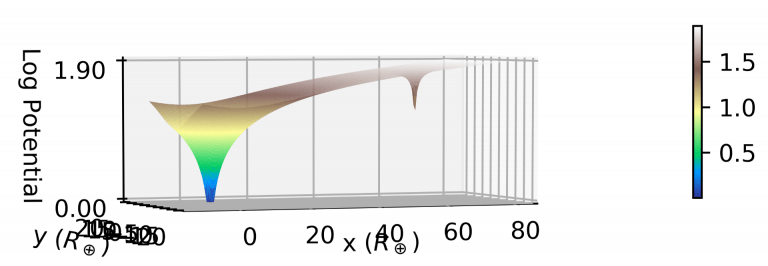

The interface for controlling the camera is easy to use, with some minor complications. The initial view is “portrait,” with the steering “joystick” control prominently displayed (see Figure 5). But the view does not switch to a larger “landscape” view simply by turning the mobile device, as some might expect. Instead, one has to press the “spreading arrows” button in the lower right corner to switch to that larger view (see Figure 6).

One on-screen button turns audio monitoring on or off (either as a toggle or in push-to-talk mode). Another lets the user take a still photo, which is saved to the camera roll on their own mobile device and can also be saved to Google Drive or shared via email or text message. Students could use this to photograph the entire apparatus, parts of it, or perhaps just a meter reading. Another on-screen button lets the user record video from the session (again saved to their own mobile device). An example of such a video is shown in Figure 7. A student could record the whole lab, or key parts of it, and review that video later.

In the expanded “landscape” view the camera steering controls are not obvious (but are also not in the way). Tapping a small icon in the upper right of the screen (see Figure 6) expands the steering controls while hiding the other audio/video controls. Tapping on the screen again clears away all control icons. It’s all easy to use once you see it.

The steering controls are fairly responsive when one is on the same network as the camera, so a student using the camera from a dorm room or elsewhere on campus should not have any trouble steering it. There is a bit of lag in the steering controls when accessing the camera from off campus, but tapping a control and then waiting for the view to update gives the desired result. There may also be an audio lag, but I have not yet tested that.

While the camera does not have a hardware zoom feature, one can zoom in software using the familiar “unpinch” gesture of spreading two fingers on the screen. The camera has two resolution modes, “SD” and “HD”. On a good network connection the HD video works well. I have not pressed the limits to see how a poor network connection degrades the video, or measured the bandwidth requirements.
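Because this zoom happens in software, it can only crop and enlarge the pixels already in the video frame; unlike an optical zoom, it adds no detail. The sketch below shows that arithmetic, assuming that “HD” means the 1920×1080 frame implied by the “1080P” model name. The SD resolution is not stated, so it would simply be passed in as other parameters. The function name is illustrative only.

```python
from __future__ import annotations


def effective_resolution(width: int, height: int, zoom: float) -> tuple[int, int]:
    """Source pixels still shown on screen after a digital zoom.

    A digital ("unpinch") zoom by a factor `zoom` keeps only the central
    1/zoom of the frame along each axis and stretches it to fill the screen.
    """
    if zoom < 1:
        raise ValueError("zoom factor must be >= 1")
    return int(width / zoom), int(height / zoom)


# Assumed HD frame of 1920 x 1080 (from the "1080P" in the model name).
for z in (1, 2, 4):
    w, h = effective_resolution(1920, 1080, z)
    print(f"{z}x zoom: {w} x {h} source pixels")
```

Under that assumption, a 4x zoom on a meter display leaves about the detail of a 480×270 image. Whether that is enough to read a meter would depend on distance and lighting, and I have not tested it.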

Sharing the Camera

One key feature that will make it easier to use this device for connecting virtual lab partners is that a camera can be “shared with family.” This means that the camera can be paired initially with the account of the lab manager or faculty member who runs the lab and who always maintains control of the device. The video and audio from the camera can then be shared with a student who has a different account (created using their campus e-mail address), but the student cannot accidentally modify or delete the camera, and access to the camera can be revoked once the lab exercise has been completed (if that is deemed necessary).

I will also note that each camera can be given a name, and that name can be changed in the app settings. We might, for example, change the name whenever a camera is moved to a different lab station.

History Review Feature

The camera manufacturer offers a subscription cloud service for saving recorded video, but this service is both optional and unnecessary for our planned use of the camera. One can insert an SD card into the camera, in which case video and audio are recorded automatically on the camera and can be played back by the remote observer. This would, for example, allow the remote student to go back and review something they missed or wanted to study in more detail. The interface for this is straightforward: a time “scrubber” is shown at the bottom of the screen, and the user can drag it back to the desired time to view the recorded video (see Figure 6). This history review feature is always available to the “owner” of the camera. For the guest account with which the camera is shared, it is off by default and must be specifically enabled.

We will have to think about how we might use this history review feature, and whether it is worth the additional expense of an SD card for each camera. The camera works fine for real-time viewing without the SD card. With the SD card it would be possible for a student with access to a particular camera to view not just their own work with their own lab partner, but the work of previous students who used the same camera. That may or may not be desirable, or worth worrying about. An instructor who was not present for the lab period could use this feature to verify attendance and participation, or to review student work to give assistance, or to evaluate problems with the equipment or lab documentation.

Desktop Client App

It would be useful for the remote student to be able to see the view from the camera on the larger screen of a desktop or laptop computer. Until recently, this camera manufacturer provided apps only for mobile devices, and my own (possibly incomplete) survey of similar products suggests that this is typical of the commodity market. These products all advertise that they work with manufacturer-specific mobile apps, and many also work with Amazon Alexa, but there is little or no mention of access via a web browser or desktop computer.

Fortunately, this camera manufacturer has recently released apps for both Mac and Windows. Unfortunately, the Mac app failed to run on macOS 10.15.4, showing a pop-up warning that it “can’t be opened because Apple cannot check it for malicious software.”[10] Further testing is warranted, but this is discouraging. On Windows there is a similar warning when you install the software, but you can still proceed. Once started, the app looks much like the mobile app, but with standard Windows controls in the title bar.[11]

The app on Windows works to view from the camera and to listen to audio, and the controls work to steer the camera, but the intercom feature is missing, so the two lab partners would not be able to talk using the camera microphone and speakers. I also had a problem creating a new camera account on the Windows app, so a mobile device may be required for that. The desktop app does show multiple cameras “owned” by the same account, so it would be handy for an instructor or lab manager to use to check on the status of all cameras while in use. But the Windows app also seems to be missing the feature to share a camera with another account, which means that a mobile device would be required to share a camera with a student for the duration of an experiment. In short, the Windows client app is behind the mobile app in several important ways.

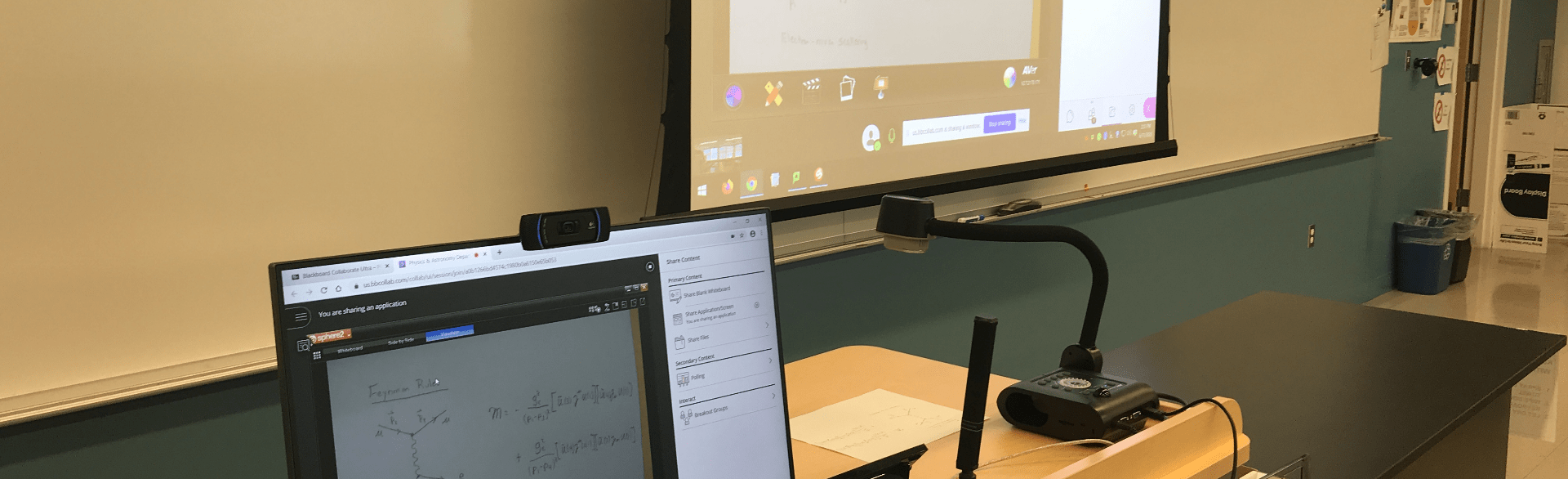

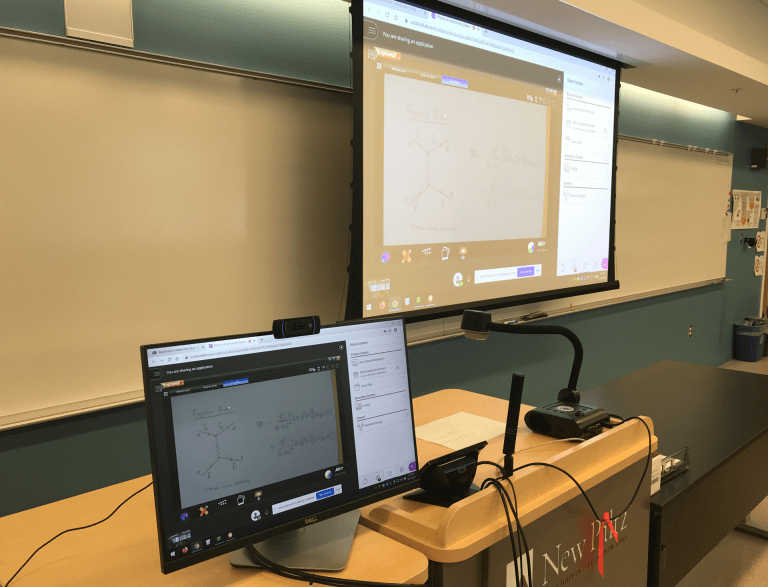

Given all this, we should keep in mind that students might concurrently use video chat software such as Blackboard Collaborate Ultra (our primary video meeting tool for classes), or WebEx (also favored on our campus). In that case the students could talk directly to each other, face to face, and use the video meeting for audio as well. Using both the steerable camera and the video chat could give the students an even better means of collaboration, and give the remote student an even greater sense of presence during the experiment. The bandwidth required to support two video streams might be a limiting factor for some students. (TODO: verify that it’s not a limit on campus.) On the other hand, the bandwidth required for even one video stream might be a problem for a student using a mobile data plan instead of Wi-Fi.
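I have not measured the bandwidth of either stream, so any numbers here are placeholders, not measurements. The sketch below only shows how to turn an assumed bitrate into the data used over a lab session, which is the quantity that matters to a student on a metered mobile plan. The 2 Mbit/s and 1 Mbit/s bitrates and the 3-hour session are hypothetical values chosen for illustration, and `session_data_gb` is an invented name. Measured bitrates should be substituted once they are available.

```python
from __future__ import annotations


def session_data_gb(bitrates_mbps: list[float], hours: float) -> float:
    """Total data, in gigabytes (10**9 bytes), used by simultaneous streams.

    bitrates_mbps: average bitrate of each concurrent stream, in megabits/second.
    hours: length of the session.
    """
    total_mbps = sum(bitrates_mbps)
    megabits = total_mbps * hours * 3600  # Mbit/s times seconds
    return megabits / 8 / 1000            # Mbit -> MB -> GB


# HYPOTHETICAL bitrates, NOT measured: 2 Mbit/s for the camera's HD stream,
# 1 Mbit/s for a concurrent video chat, over a 3-hour lab period.
print(session_data_gb([2.0], 3))       # camera only
print(session_data_gb([2.0, 1.0], 3))  # camera plus video chat
```

Each additional stream adds its own bitrate to the total. That is why running the camera and a video chat at the same time could use up a capped mobile data plan quickly, even if both streams work well on campus Wi-Fi.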

Multiple Remote Users?

Access to a camera can be shared with more than one guest observer, so it might be possible for a person physically in the lab to work with more than one remote lab partner. Initial tests of several remote observers accessing the same camera showed some intermittent video buffering, but it was not clear that this was actually due to having more than one person viewing the camera — it might have simply been that the camera was too far from the router for a reliable connection. A later test with the camera connected via wired Ethernet supported multiple users with no observed buffering. Further testing is warranted, but this is encouraging.

If more than one person can connect and use the camera at the same time, then this could also make it possible for an instructor to render assistance to students during the experiment without having to be physically present in the room, thus further supporting social distancing.

Signal Security

According to the manufacturer’s website,[12] the “requests” between the camera, mobile devices, and servers use secure HTTPS with a “two-way mutual authentication process to ensure that the user’s personal information is not compromised. Each device has its own key and certificate to authenticate with the server.” The video from the camera to the user device is sent over an encrypted peer-to-peer connection so that “only the end user can view the video content. Any possible interception happening during transmission information will only see scrambled and encrypted data.”

I also note that the sharing system is designed to limit access to the camera to the “owner” and to anyone else the owner designates by their campus e-mail address. We can also revoke the share after the lab exercise is over, if we need to. It is also possible to add a PIN code to the camera, so that a user must enter that code to access the video stream even if the camera is shared with them. Access security is then ensured by both something they have (their phone with the app) and something they know (the PIN code).
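The access rules described in this article fit in a small model: the owner always has control, guests can be added and revoked, history review is off by default for guests, and an optional PIN can be required. This is a hypothetical sketch for thinking through how a lab manager might track shares. The class and method names are invented for illustration and are not part of the manufacturer's app or any API. The sketch also assumes that the PIN applies to every account, including the owner's, which the text does not settle.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LabCamera:
    """Hypothetical record of one camera's sharing state (not the YI app's API)."""
    name: str                   # e.g. renamed when moved to a new lab station
    owner: str                  # lab manager or faculty account that paired it
    pin: Optional[str] = None   # optional PIN; None means no PIN is set
    # Guest e-mail -> whether history review has been enabled for that guest.
    guests: dict[str, bool] = field(default_factory=dict)

    def share(self, email: str) -> None:
        self.guests[email] = False  # history review is off by default for guests

    def enable_history(self, email: str) -> None:
        if email in self.guests:
            self.guests[email] = True

    def revoke(self, email: str) -> None:
        self.guests.pop(email, None)

    def can_view(self, account: str, pin: Optional[str] = None) -> bool:
        # Something you have: an account that owns the camera or was shared it...
        if account != self.owner and account not in self.guests:
            return False
        # ...plus something you know: the PIN, if one has been set.
        # (Assumption: modeled here as applying to every account.)
        return self.pin is None or pin == self.pin

    def can_review_history(self, account: str, pin: Optional[str] = None) -> bool:
        if not self.can_view(account, pin):
            return False
        return account == self.owner or self.guests.get(account, False)


cam = LabCamera(name="Station 3", owner="labmanager@example.edu", pin="1234")
cam.share("student@example.edu")
print(cam.can_view("student@example.edu", pin="1234"))            # True
print(cam.can_review_history("student@example.edu", pin="1234"))  # False
cam.revoke("student@example.edu")
print(cam.can_view("student@example.edu", pin="1234"))            # False
```

The e-mail addresses are placeholders. The design point is that revoking a share removes the guest entirely, so a later student sharing the same station starts again with history review off.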

Other Camera Features

Since this is a camera for home monitoring, it has other features that are probably not useful for the local+remote classroom use case, but some should be mentioned if only to warn the user to disable them lest they get in the way:

- The camera has a motion detection feature where it automatically points to the source of motion. This does not work well in the lab during an experiment. It is off by default (TODO: verify) and in any case should be disabled for lab work.

- The history review feature can also be configured to only record when motion is detected. That might be useful for helping find a particular bit of video based on past activity, not just time, especially if there are long breaks between data-taking sessions. (It is not necessary to worry about filling the SD card with video, as the device simply records over the oldest previous recordings.)

- The status light on the front of the camera base can be configured to stay off when the camera is in use. This option should not be used: when the light is on, it flashes to signal network problems, and it reminds students that the camera is operating.

- The image can be rotated upside down, for mounting the camera on the ceiling. Maybe there is a way to use this to give the remote student a better view?

- There is a “crying baby” detection feature which one hopes is not necessary (but putting our students through all these complications just to get their degree might trigger some justified crying).

- The camera has infrared LED lighting which can be used to view and record in otherwise total darkness. We probably don’t need this feature.

- The camera microphone can be disabled in the settings. But since it can also be turned on or off by the primary user controls, totally disabling it would only be useful if we always use video chat in addition to the steerable camera, and it would be confusing if students decided later to use the camera audio.
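The overwrite behavior mentioned in the history review item above is ordinary loop recording: when the SD card is full, the oldest recording is discarded to make room for the newest. Below is a minimal sketch of that idea. It assumes video is stored in fixed-length segments identified by their start time, and the camera's actual segment length and file format are not known here. `LoopRecorder` is an invented name. Python's `collections.deque` with `maxlen` drops the oldest item on its own when it is full, which is exactly this discard-oldest behavior.

```python
from __future__ import annotations

from collections import deque


class LoopRecorder:
    """Sketch of loop recording on a card that holds `capacity` segments."""

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen silently discards the oldest entry when full.
        self.segments: deque[tuple[int, str]] = deque(maxlen=capacity)

    def record(self, start_minute: int, label: str) -> None:
        self.segments.append((start_minute, label))

    def available(self) -> list[int]:
        """Start times still on the card, oldest first."""
        return [start for start, _ in self.segments]


card = LoopRecorder(capacity=3)
for minute in (0, 10, 20, 30):
    card.record(minute, f"segment starting at minute {minute}")
print(card.available())  # [10, 20, 30]
```

This is also why, as noted above, a student with history review access could see earlier sessions on the same camera, but only as far back as the card's capacity allows.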

Other Manufacturers?

I only tested this single camera from one camera manufacturer. I might test others, and I might even post a comparison. Or maybe not, if we decide that this camera satisfies all our requirements. Because these are commodity devices for home use I expect that similar cameras on the market from other manufacturers will have similar features. I would welcome reports from readers about which needed features are present or absent in some other make of home camera.

If using these cameras to enable virtual lab partner collaboration works as expected, the market for such devices might get tighter, just as it is tight now for webcams. In that case using cameras from other manufacturers, and maybe even mixing them, might become a necessity.

Legal Concerns

Even though it appears that there are no major technical problems with using this kind of camera as proposed, there may well be legal or policy hurdles to be surmounted. At SUNY New Paltz I’m told that installing devices that capture video or audio from a classroom space requires approval from the University Police Department (UPD), and possibly the HR department as well. I am hopeful that we can get such approval, because we already have lecture capture cameras installed in our labs, and those must have already been approved.

Concern has also been raised that we must ensure that we comply with NY State Penal Code Article 250.00, which deals with wiretapping and interception of electronic communications. One type of crime described there involves a third party who intercepts signals meant for someone else. If the peer-to-peer video stream between camera and observer is properly encrypted, as the manufacturer claims, then wiretapping and interception by a third party should not be possible, though our IT staff may need to verify the claimed encryption and confirm that it is adequate. Another type of crime applies to someone who sets up “an imaging device to surreptitiously view, broadcast or record a person” in various private situations (NYS Penal Code Section 250.45). Clearly, lab cameras used as described above are not “surreptitious.” Furthermore, according to NYS Penal Code Section 250.65, the provisions of §250.45 do not apply to “video surveillance devices installed in such a manner that their presence is clearly and immediately obvious.” As long as the cameras are as visible to students as they are in the photos above, with the blue light on to show that they are operating, there should be no problem. However, the University Police and lawyers will clearly have to render judgement on all this at some point.

The purpose of this article has been to see whether any technical hurdles would prevent commodity “nanny cameras” from being used to enable virtual lab partner collaboration, before we get as far as involving administrators and lawyers. So far, so good.

Notes and References

1. Troubleshooting equipment is an excellent model of the scientific process of forming a hypothesis and then testing it.

2. See, for example, Eric Mazur, Peer Instruction: A User’s Manual (Prentice Hall, 1997).

3. Cameras that can pan, tilt, and zoom are called “PTZ” cameras.

4. A Logitech PTZ Pro 2 camera costs over $750 on Amazon, or $850 direct from the manufacturer.

5. It may be that “hybrid” has the same meaning throughout SUNY, but I have yet to confirm this.

6. The current price for the YI-Cloud Dome Camera 1080P from the manufacturer is $33.99, with free shipping only on orders over $35. The current price on Amazon is $29.99 with free shipping.

7. I tried saving a screenshot of the QR code to use later to add another camera, but I found that this doesn’t work. The information in the QR code is not encoded as plain text, so it likely includes a time or location to prevent just such a “replay attack.”

8. Beware: an account on the mobile device and an account on the manufacturer’s web site seem not to be the same thing.

9. AboveTEK Heavy Duty Aluminum Gooseneck iPad Holder, about $30 from Amazon at the time of purchase, but the price keeps going up. I also found these useful for making homemade document cameras, and I suspect the price has gone up because others have discovered that too.

10. On an older Mac I was told that the app requires macOS 10.11 or later. Alas, that machine was too old to run the app.

11. Inspecting the libraries installed with the Windows app showed standard graphics libraries and the Qt interface library.

12. “How do I ensure the security and privacy of my videos?” YI Technology Help Center, https://help.yitechnology.com/hc/en-us/articles/234469188-How-do-I-ensure-the-security-and-privacy-of-my-videos-