May 2024

Rethinking Assessment for Generative AI

- AI Has Changed Learning, Why Aren’t We Regulating It? According to Marc Watkins’s sobering analysis, educators need to be prepared for the changes AI tools like GPT-4o bring to the learning process. Existing regulations, such as FERPA, COPPA, IDEA, CIPA, and Section 504, must be considered for AI tools in education. (Rhetorica; May 14, 2024)

- GenAI Strategy: Attack Your Assessment Ed’s Rec Leon Furze, as usual, is on target, this time with this call to revisit faculty approaches to assessment. The central theme is the necessity for educators, regardless of their field, to critically evaluate and, if necessary, revise their assessment strategies. This involves using GenAI tools to simulate student responses to current assessment tasks, thereby revealing vulnerabilities. The author provides practical steps for educators to engage with AI technology during faculty meetings and use it to test the robustness of their assessment tasks. (Leon Furze; May 13, 2024)

- Experts Predict Major AI Impacts in New Report The Educause report, which includes the opinions of higher ed and tech experts, highlights the potential transformation AI can bring to teaching, learning, student support, and institutional management. It emphasizes the importance of preparing educators and institutions for this AI-driven shift in education to maximize its benefits. (Inside Higher Ed; May 13, 2024)

- The Good, the Bad, & the Unknown of AI In a keynote at UMass, Lance Eaton focused on both the challenges and successes of generative AI. Two important points: AI can assist in navigating tedious tasks and projects, minimizing energy drain, and AI’s fallibility can heighten awareness and scrutiny of the information received. (AI + Education = Simplified; May 13, 2024)

- Harnessing the Power of Generative AI: A Call to Action for Educators Ripsimé K. Bledsoe (Texas A&M) argues that if educators steer the integration of AI with intention and purpose, it can reach its potential as an immense and versatile student success tool. She offers six areas on which to focus efforts. (Inside Higher Ed; May 10, 2024)

- No One Is Talking about AI’s Impact on Reading AI assistants can now summarize and query PDFs (this editor uses them), highlighting the potential impact on reading skills. While AI assistants can help many readers, including neurodiverse and second-language learners, these tools may wind up hindering students’ ability to critically engage with texts and form nuanced conclusions. Marc Watkins suggests that active reading assignments, social annotation tools, and group discussions can encourage students to focus on the act of reading and not rely solely on AI assistants. (Rhetorica; May 3, 2024)

- Ditch the Detectors: Six Ways to Rethink Assessment for Generative Artificial Intelligence Ed’s Rec Leon Furze again writes a prescient article, this time about assessment. (Leon Furze; May 3, 2024)

- GenAI Strategy for Faculty Leaders Ed’s Rec. Leon Furze offers a blueprint for developing AI guidelines. A great resource. (Leon Furze; May 1, 2024)

- New AI Guidelines Aim to Help Research Libraries The Association of Research Libraries announced a set of seven guiding principles for university librarians to follow in light of rising generative AI use. (Inside Higher Ed; May 1, 2024)

April 2024

AI Detection in Education Is a Dead End Researcher-PhD candidate Leon Furze’s blog post is a well-written exploration—and explanation—about AI detection software and its impact on students. For those who don’t want to wade through the study below, this is a friendly interpretation of some of the findings. (April 9, 2024)

**New Study** GenAI Detection Tools, Adversarial Techniques and Implications of Inclusivity in Higher Education From the article’s abstract: The results demonstrate that the detectors’ already low accuracy rates (39.5%) show major reductions in accuracy (17.4%) when faced with manipulated content, with some techniques proving more effective than others in evading detection. The accuracy limitations and the potential for false accusations demonstrate that these tools cannot currently be recommended for determining whether violations of academic integrity have occurred, underscoring the challenges educators face in maintaining inclusive and fair assessment practices. However, they may have a role in supporting student learning and maintaining academic integrity when used in a non-punitive manner.

- Responsible AI and the ‘Future of Skills’ A gathering hosted by ETS delved (!) into how AI could change how students are tested and how employers assess skills. (Inside Higher Ed; April 26, 2024)

- Good Ideas: When to Use AI for Brainstorming Another great blog post by Leon Furze, making the point that humans may be better off trying to brainstorm without AI first. Great read. (Leon Furze; April 25, 2024)

- Making Meaning with Multi-Modal GenAI Leon Furze has become an important voice when it comes to reflecting upon genAI. Take a look at this overview of multi-modal communication. (Leon Furze; April 23, 2024)

- Students Putting AI to Work A brief article from Gonzaga University, illustrating gen AI applications in the classroom and student attitudes to the technology. (April 23, 2024)

- Another Workshop for Faculty and Staff Lance Eaton reflects on a recent faculty workshop and provides access to his slide deck and other material. (AI + Education = Simplified; Lance Eaton; April 23, 2024)

- Innovation Through Prompting: Democratizing Educational Technology In this post, Ethan Mollick examines how gen AI enables non-techies to leverage tech tools in interesting ways. (One Useful Thing; April 22, 2024)

- AI and the End of the Human Writer The article explores the impact of AI on writing, noting how language models like ChatGPT can produce diverse literary forms without experiencing the existential doubts that plague human writers. Will true human writing, with its quirks and authenticity, survive? The author is not so sure. (The New Republic; April 22, 2024)

- It’s the End of the Web as We Know It (The Atlantic; April 22, 2024)

- On Building an AI Policy for Teaching and Learning (with a link to the actual document here) Lance Eaton, working with students and faculty from College Unbound, created well-thought-out guidelines for both student and faculty use of AI. (AI + Education = Simplified; Lance Eaton; April 17, 2024)

- Generative AI Doesn’t ‘Democratize Creativity‘ Ed’s Rec Leon Furze’s answer to Ethan Mollick. Great! (Leon Furze; April 16, 2024)

- AI and Higher Education: Scenes from ASU-GSV (Arizona-State-Global-Silicon-Valley; yes, it’s a thing, a big thing.) And this year, gen AI was at the center of the discussion. (Bryan’s Substack; April 16, 2024)

- Annual Provosts’ Survey Shows Need for AI Policies, Worries Over Campus Speech The title says it all. (Inside Higher Ed; April 16, 2024)

- 9 Point Action Plan: For Generative AI Integration into Education Brent Anders blogs about his new book. (SOVOREL; April 14, 2024)

- What Just Happened, What Is Happening Next Ed’s Rec. Ethan Mollick gives his readers an update on the tasks that generative AI is now able to do well. According to Mollick, the capabilities of LLMs are doubling every 5-14 months, which is impressive. As usual with Mollick’s posts, this is well worth the read. (One Useful Thing; April 9, 2024)

- Research Insights #7: Open Educational Resources & Generative AI Part I Ed’s Rec. This is Lance Eaton’s latest commentary on scholarly articles about generative AI. This research looked at AI + OER within the context of UNESCO’s recommendations about OER. Eaton notes that insights from the study can guide educational developers in creating strategies that leverage AI to increase student engagement and participation. (AI + Education = Simplified; April 9, 2024)

- More Teachers Are Using AI Detection Tools: Here’s Why That May Be a Problem The article’s title indicates the topic. (Education Week; April 5, 2024)

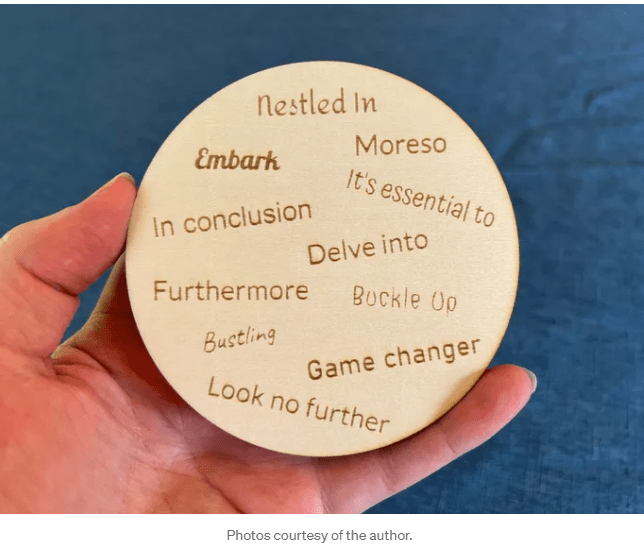

- I Turned ChatGPT’s Horrible Writing Cliches into a Handmade Coaster Behind a paywall, but you don’t really need to read it. The lovely coaster is pictured below. (The Generator; April 2, 2024)

March 2024

Report: The Advantages that AI Brings to Higher Ed (Link to report included) A report highlights AI’s potential to enhance higher education through student support and data analysis, emphasizing the importance of equitable access and culturally aware design to prevent a new digital divide and ensure HBCUs and MSIs benefit without falling behind. (Diverse Issues in Higher Education; March 13, 2024)

- AI-Generated Garbage Is Polluting Our Culture Peer reviewers are using gen AI to write their reviews? Apparently. This is just one cultural shift that Erik Hoel derides in this critique of gen AI. (NY Times; March 31, 2024)

- On the Necessity of a Sin: Why Treating AI Like a Person Is the Future Ed’s Rec. Well worth the read. Ethan Mollick looks at AI anthropomorphization. (One Useful Thing; March 30, 2024)

- Assessment of Student Learning Is Broken And Generative AI Is the Thing That Broke It Ed’s Rec This cri du coeur about student use of generative AI proposes a year-long break from program assessment. The authors also call on administrative leadership, including members of accreditation organizations, to address the use of generative AI. (Inside Higher Ed; March 28, 2024)

- The Enduring Role of Writing in the AI Era Ed’s Rec. Thoughts from the perspective of a First-Year Writing Instructor and Director of the Mississippi AI Institute. (Rhetorica; March 2024)

- I Cyborg: Using Co-Intelligence Ethan Mollick discusses the integral role AI played in writing his book Co-Intelligence, exploring the concepts of cyborg and centaur collaboration between humans and AI, and speculates on the evolving capabilities of AI in creative processes. (One Useful Thing; March 14, 2024)

- How the AI that Drives ChatGPT Will Move the Physical World A startup by former OpenAI researchers is advancing AI to enable robots to perform tasks like sorting and moving items in warehouses. (NY Times; March 11, 2024)

- AI Is Learning What It Means to Be Alive AI-driven research identified the Norn cell, a crucial element in oxygen regulation, demonstrating the profound impact of artificial intelligence on accelerating the discovery and understanding of complex biological mechanisms. (NY Times; March 10, 2024)

- What Sora Might Mean for Higher Education (Bryan’s Substack; March 5, 2024)

- My Kids Will Fancy Generative AI. I Choose to Fight It Another thoughtful piece by Alberto Romero who says he does not want to imagine a world in which humans don’t write anymore. (The Algorithmic Bridge; March 5, 2024)

- Captain’s Log: The Irreducible Weirdness of Prompting AIs Ed’s Rec. Classic Ethan Mollick. He believes that while there is no exact science to prompt engineering, expertise in crafting effective prompts empowers AIs to achieve more than initially apparent. Worth the read. (One Useful Thing; March 4, 2024)

- Will Large Language Models Really Change How Work Is Done? (MIT Sloan Review; March 4, 2024)

- Student Fights AI Cheating Allegations for Using Grammarly A sobering look at the near future—or actually, right now. (The Hill; March 4, 2024)

- On Not Using Generative AI Ed’s Rec Lance Eaton reflects on when he uses generative AI and when he does not. (AI + Education = Simplified; March 3, 2024)

February 2024

2024 Educause AI Landscape Study Ed’s Rec. This is an important study, looking at a number of areas, including strategic planning. (Educause; Feb. 2024)

- While AI Companies Promise Heaven, Underage Workers Are in a Hellhole Ed’s Rec. For Medium Subscribers. Alberto Romero’s sobering article juxtaposes the grand promises of AI companies with the harsh reality of underage workers training AI. Really worthy of a close read. Romero references this article from Wired: Underage Workers Are Training AI (Feb. 28, 2024)

- AI Will Shake Up Higher Ed. Are Colleges Ready? (A lot rides on the answer . . . ) Ed’s Rec. (Chronicle of Higher Ed; Feb. 26, 2024)

- Things to Consider Before All in Favor of AI Ed’s Rec. This thoughtful piece looks at how generative AI can be constraining when writing a rough draft. The author also looks at why high-stakes writing (as in CVs) requires more than an AI-generated document. This is a good article to share with students. (Inside Higher Ed; Feb. 26, 2024)

- Notes on Sora: Thinking about Video Generation and the Future Ed’s Rec. Sora is OpenAI’s new video creation tool that will soon be made available to the public. Bryan Alexander takes a look at how this tool may impact the future of video production. (Bryan’s Substack; Feb. 23, 2024)

- Christopher Andreola’s YouTube Video Resource List This YouTube collection includes some useful AI-focused videos, in particular for students in graphic design. Take a look! (Design; SUNY New Paltz)

- AI & The Copyright & Plagiarism Dilemma As Lance Eaton notes, potential lawsuits against AI companies may lead to a rethinking of copyright in the digital age. A very thoughtful parsing of legal terms, plagiarism, and “transformative use.” (AI + Education = Simplified; Feb. 16, 2024)

- How AI Has Begun Changing University Roles, Responsibilities A survey by Educause found that more faculty members and university leaders are starting to work with artificial intelligence in their roles. A lack of formalized training in AI was observed, with only 56 percent of universities training faculty members and even lower percentages for staff and students. (Inside Higher Ed; Feb. 13, 2024).

- AI: The Unseen Ally in Mastering Deep Work Ed’s Rec Srinivas Rao makes a wonderful observation about how AI can enhance our capacity for deep work by helping to master complex things quickly and work at high levels of depth. Well worth the read. (Medium; Feb. 9, 2024)

A short, 15-minute video by ed technologist Lance Eaton about how faculty and instructional designers can approach the use of generative AI:

- Google’s Gemini Advanced: Tasting Notes and Implications Ethan Mollick does not provide a detailed review of Gemini but makes several broad statements about its capabilities. Gemini Advanced shares similarities with GPT-4 but also has its own strengths and weaknesses and provides insight into the future of AI development and the emergence of advanced AI models. Gemini Advanced signifies the start of a wave of AI development rather than the end. It suggests the potential for future AI agents to function as powerful assistants. (One Useful Thing; Feb. 8, 2024)

- AI Content Vs a Top 1% Writer (Dan Martin from AI Monks; behind paywall on Medium, but you should be able to see the opening paragraphs). Here is a good summary of Martin’s findings about ChatGPT at this moment in time:

Comparison of AI-generated writing and human-written content highlights limitations and emphasizes the need for human creativity and originality in content creation:

- AI’s Limitations in Writing:

  - AI-generated writing lacks readability and quality, and is incapable of producing new ideas and insights without heavy prompting.

  - AI writing tools like ChatGPT simply replicate what’s already out there, using different phrasing to give the illusion of being creative.

- Use of ChatGPT for Idea Generation:

  - ChatGPT can assist in brainstorming and suggesting themes and ideas based on the user’s inputs.

  - It can also help flesh out rough drafts and provide structure, making it a valuable tool for generating content ideas.

- Overcoming Writer’s Block with ChatGPT:

  - When struggling with writer’s block, users can input a rough outline or bullet points into ChatGPT to kickstart creativity and get the writing process moving again.

  - This demonstrates the potential of ChatGPT as a creative ally rather than a lazy shortcut.

- ChatGPT’s Role in Content Quality Enhancement:

  - ChatGPT can also be used to proofread content, check for grammatical errors, and suggest improvements in readability, thereby enhancing content quality.

  - Users should ensure to balance ChatGPT’s outputs with their unique voice and style and verify information for ethical and quality considerations.

- AI Content vs. Human Writing:

  - The comparison between AI-generated writing and human-written content highlights the limitations of AI in terms of context understanding, accuracy, and genuine creativity.

  - It emphasizes the need for human creativity and originality in content creation despite the assistance of AI tools like ChatGPT.

- Differences in Writing Styles:

  - AI-generated content can be identified by specific words and phrases it overuses, such as ‘ever-evolving landscape,’ ‘harness,’ ‘delves,’ and an overuse of semi-colons.

  - Human writing exhibits perplexity and burstiness, characteristics that AI struggles to replicate, leading to more robotic-sounding content.

- The AI Revolution in Higher Ed Even keeping in mind that Grammarly helped to produce this booklet, one has to say it still provides some useful data and interesting ideas. (Feb. 2024)

- Is AI Just a Tool for Lazy People? Short answer: No. Mark Herschberg’s (MIT) conclusion is that generative AI is actually being leveraged effectively by highly engaged professionals. (Medium; Feb. 7, 2024)

- Wisdom Skills Are Hard to Teach—AI Can Help The author makes the case that experiential learning through AI-powered games can address the shortage of extended on-the-job experience, offering the potential for unlocking big-picture cognition. (Inside Higher Ed; Feb. 7, 2024)

- Generative AI, Bullshit as a Service As Alberto Romero points out, AI is being used for dishonest and malicious purposes, from generating disinformation to creating spam. While these uses are disturbing, Romero argues that despite dire warnings of catastrophic outcomes, AI is primarily used to create “BS.” A philosophical-lite treatise, worth a read. (The Algorithmic Bridge; Feb. 6, 2024)

- Pinging the scanner, early February 2024 Bryan Alexander takes a look at recent AI and tech updates from Google, Amazon and Microsoft. Yes, Copilot will be ubiquitous. Also, programmers are designing “hostile AI architecture” in the hopes of addressing copyright infringement issues. Below, you will find the OG Rufus, the Welsh Corgi after which Amazon programmers have named their chatbot shopping assistant. (Bryan’s Substack; Feb. 6, 2024)

The Original Rufus

Education Week: Spotlight on AI Ed’s Rec This compilation of articles about generative AI in the K-12 space is very helpful. (Education Week; Feb. 2024)

- 7 Questions College Leaders Should Ask about AI Presidents and others should be developing strategies to ensure their institutions are positioned to respond to the opportunities and risks, writes David Weil (Brandeis). (Inside Higher Ed; Feb. 1, 2024)

January 2024

- What Can Be Done in 59 Seconds: An Opportunity (and a Crisis) Ed’s Rec. Mollick reflects on how generative AI has proven to be a powerful productivity booster, with evidence for its effectiveness growing over the past 10 months. The wide release of Microsoft’s Copilot for Office and OpenAI’s GPTs has made AI use much easier and more normalized. (One Useful Thing; Jan. 31, 2024)

- The Biggest AI Risk in 2024 May be behind a paywall, but I will provide a summary. Thomas Smith traces several big issues: data privacy (1 in 10 medical providers use ChatGPT, which means patient data is likely being compromised), copyright issues, and, of course, hallucinations. However, Smith sees the biggest risk of generative AI as . . . pretending it doesn’t exist and not learning how to use it ethically. His focus is primarily on business, but this observation is worth considering: “Avoiding grappling with AI challenges is itself a decision.” (The Generator; Jan. 26, 2024)

- Embracing AI in English Composition Ed’s Rec. From the abstract: A mixed-method study conducted in Fall 2023 across three sections, including one English Composition I and two English Composition II courses, provides insightful revelations. The study, comprising 28 student respondents, delved into the impact of AI tools through surveys, analysis of writing artifacts, and a best practices guide developed by an honors student. (International Journal of Changes in Education; Jan. 22, 2024)

- Last Year’s AI Views Revisited Ed’s Rec. Another great read, this time by Lance Eaton. In terms of higher education, Eaton stresses the importance of faculty fully understanding the technology and shaping its use in the classroom to mitigate emerging problems. Even if you are not all that interested in generative AI, this article is worth the read. (AI + Education = Simplified; Jan. 24, 2024)

- ChatGPT Can’t Teach Writing: Automated Syntax Generation Is Not Teaching Ed’s Rec John Warner steps in to fire back at OpenAI’s partnership with Arizona State. (Inside Higher Ed; Jan. 22, 2024)

- What Happens When a Court Cuts Down ChatGPT? Ed’s Rec. Not an idle question posed by futurist Bryan Alexander. (Bryan’s Substack; Jan. 21, 2024)

- ChatGPT Goes to College Bret Kinsella muses over the ways OpenAI’s partnership with Arizona State will benefit both parties. (Synthedia; Jan. 20, 2024)

- OpenAI Announces First Partnership with a University According to the article, “Starting in February, Arizona State University will have full access to ChatGPT Enterprise and plans to use it for coursework, tutoring, research and more.” (CNBC; Jan. 18, 2024)

- AI Dominates Davos It’s that time of year! (CNBC; Jan. 17, 2024)

- AI Writing Is a Race to the Bottom by Alberto Romero, The Algorithmic Bridge Romero’s article discusses how AI writing tools, while offering convenience and efficiency, create a competitive environment that forces human writers to use these tools, ultimately sacrificing the uniqueness of human writing to Moloch, the system of relentless competition. (Jan. 17, 2024)

- The Lazy Tyranny of the Wait Calculation by Ethan Mollick, One Useful Thing. Mollick introduces the concept of a “Wait Calculation” in the context of AI development, where waiting for advancements in AI technology before starting a project can sometimes be more beneficial than immediate action, highlighting the rapid pace of AI development, its potential to impact various fields, and the need to consider the timeline of AI progress in long-term decision-making. (Jan. 16, 2024)

- Who Is ChatGPT? by Dean Pratt, AI Mind A fascinating (or really creepy, depending upon your POV) article in which the author explores a philosophical conversation with an AI entity named Bard, discussing the potential future where AI technology becomes a co-creator and catalyst for experiences blending the real and dreamlike, as well as the importance of empathy, optimism, and interconnectedness in the interaction between humans and AI. (Jan. 14, 2024)

- Creating a Useful GPT? Maybe . . . Lance Eaton has been experimenting with creating customized GPTs. The article explains how one can go about doing it and discusses their limits as well as their promises for the future. (AI + Education = Simplified, Jan. 8, 2024)

**Important OER Resource from Oct. 2023: TextGenEd: Teaching with Text Generation Technologies Edited by Vee et al. WAC Clearinghouse** At the cusp of this moment defined by AI, TextGenEd collects early experiments in pedagogy with generative text technology, including but not limited to AI. The fully open access and peer-reviewed collection features 34 undergraduate-level assignments to support students’ AI literacy, rhetorical and ethical engagements, creative exploration, and professional writing text gen technology, along with an Introduction to guide instructors’ understanding and their selection of what to emphasize in their courses. (Oct. 2023 but I put the book here.)

Book Launch of TextGenEd: Teaching with Text Generation Technologies:

- Signs and Portents: Some Hints about What the Next Year in AI Looks Like by Ethan Mollick, One Useful Thing Ed’s Rec The article discusses the accelerated development of artificial intelligence (AI) and its impact on various aspects of society, emphasizing the need for proactive measures to navigate the challenges and opportunities presented by AI. Mollick highlights AI’s impact on work, its ability to alter the truth through deepfakes and manipulated media, and its effectiveness in education. (Jan. 6, 2024)

- How Will AI Disrupt Higher Education in 2024? by Ray Schroeder, Inside Higher Ed The article discusses the significant impact of generative AI on higher education, highlighting its potential to provide personalized learning experiences, assist faculty, and enhance course outcomes, while also addressing concerns about the emergence of artificial general intelligence (AGI) and its potential implications for education. (Jan. 6, 2024)

- Gender Bias in AI-Generated Images: A Comprehensive Study by Caroline Arnold in Generative AI on Medium (paywall). Arnold shares her findings about gender bias in the Midjourney generative AI algorithm when generating images of people for various job titles, highlighting that the AI model often fails to generate female characters in images, especially for professions where women are underrepresented. While the article is behind a paywall, you can probably find other articles on this topic. (Jan. 4, 2024)

- AI and Teaching College Writing A Future Trends forum discussion, again with Bryan Alexander (Jan. 4, 2024)

- The NYT vs OpenAI Is Not Just a Legal Battle by Alberto Romero, The Algorithmic Bridge This article explores the New York Times (NYT) lawsuit against OpenAI, focusing on the deeper disagreement regarding the relationship between morality and progress in the context of AI, suggesting that while pro-AI arguments emphasize the potential benefits of technology, there should be a more balanced consideration of its impact on society and creators’ rights. (Jan. 3, 2024)

- Empowering Prisoners and Reducing Recidivism with ChatGPT by Krstafer Pinkerton, AI Advances. Note: The article in its entirety is behind a Members Only paywall, but perhaps you can find Pinkerton’s musings elsewhere. The article explores the potential use of AI language model ChatGPT in prisoner rehabilitation to reduce recidivism rates, emphasizing personalized learning experiences, a safe environment, and ethical considerations, while also highlighting future developments and calling for collective action to responsibly harness AI’s potential in this context. (Jan. 2, 2024)

- Envisioning a New Wave of AI on Campus with Bryan Alexander and Brent Anders This was a fun scenario exercise, in which participants were asked to imagine a future, with AI avatars as instructors. (Jan. 1, 2024)