**New Fall 2024** A Student Guide to Navigating College in the Artificial Intelligence Era As AI becomes integral to higher education, students face a new need to gain AI skills that will prepare them for the evolving landscape in college and life after graduation. Elon University and AAC&U collaborated to respond to this need by creating this guide to help students navigate college in the era of artificial intelligence.

**New From MLA-CCCC: Oct. 2024: Third Working Paper** MLA-CCCC-Joint-Task-Force-WP-3-Building-Culture-for-Gen-AI-Literacy The working paper “Building a Culture for Generative AI Literacy in College Language, Literature, and Writing” by the MLA-CCCC Joint Task Force offers a measured and comprehensive approach to the use of generative AI (GAI) in educational settings. It neither fully endorses nor opposes AI use; rather, it emphasizes developing critical AI literacy. Here are the key areas: Critical Literacy Focus; Establishing Student Learning Outcomes; Providing Educator Guidance; and Institutional and Program-Level Recommendations, among others. Issued: October 2024.

**New from MLA-CCCC: Student AI Guide**: Student_Guide_AI_Literacy

How to Write as if You Aim to Trick Others into Believing that You Are Generative AI This is an important essay by Lance Eliot, centering on the increasingly complex interactions between humans and generative AI—and its impact on our writing. (Forbes; July 27, 2024)

Impact Research on the Use of AI among Students According to this May 2024 survey, 82% of college undergrads use GenAI.

Optimizing AI in Higher Education: SUNY FACT² Guide, Second Edition Created by SUNY faculty and Ed Tech professionals. Very, very useful! (Summer 2024)

AI Policy Toolkit: Transparency Checklist Leon Furze provides school admins and faculty with a useful checklist covering the use of AI. Although the checklist is aimed at K-12 school officials using AI, it is useful for higher ed as well. From the blog post: By following this checklist, schools can help ensure they’re aligned with the Framework’s transparency principle, and more broadly with best-practice in implementing new technologies. It’s not just about ticking boxes, though. True transparency requires an ongoing commitment to open communication and a willingness to engage with all members of the school community. (July 2024)

Developing a Model for AI across the Curriculum: Transforming the Higher Education Landscape Via Innovation in AI Literacy OK, not a 2024 article, but one that is very insightful. From the abstract: This position paper describes one possible path to address potential gaps in AI education and integrate AI across the curriculum at a traditional research university. The University of Florida (UF) is infusing AI across the curriculum and developing opportunities for student engagement within identified areas of AI literacy regardless of student discipline. (Computers and Education: Artificial Intelligence; Vol. 4, 2023)

Other Collections of Articles

- The Chronicle of Higher Education Archive of Ed Tech Articles

- Inside Higher Ed Archive of AI Articles

- NYTimes Archive of AI Articles

- WSJ Archive of AI Articles and Videos

- Dr. Heather Brown’s Repository of AI and Education Information

- Generative AI in Higher Ed: Academic Bloggers to Follow

The FDC 2024 Repository of Articles Note: This somewhat eclectic collection of articles has been taken primarily from higher ed sources, including bloggers. Articles from popular sources such as the NY Times do appear, but it is assumed that most SUNY New Paltz faculty will see those articles. A short description of each article appears after a link to the copy. If you have any problems with broken links, please email rigolinr@newpaltz.edu. And in keeping with full disclosure, sometimes generative AI has been used as an editorial assistant!

December 2024

GenAI Use Cases in Education: Take a Look

One Year Later: The Impact of Generative AI on Education Study.com’s study looks at K-12, but the findings are applicable to higher ed, since today’s K-12 students are our soon-to-be students.

- How Will AI Influence Higher Ed in 2025? Inside Higher Ed interviewed seven higher education professionals about the future of AI. The consensus is that AI will become essential infrastructure for higher education, supporting teaching, research, and operations, with personalized AI tutors, adaptive learning, and AI-driven admissions becoming mainstream. Institutions will grapple with ethical concerns, accountability, and equity, while faculty, students, and administrators navigate the shift toward AI-enhanced learning, healthcare training, and academic governance. (Inside Higher Ed; Dec. 19, 2024)

- What Just Happened Mollick provides an overview of the last month, which, he says, has seen a rapid surge in AI development, with numerous GPT-4-level and higher models emerging globally, some of which can run on personal devices, marking a shift from exclusive corporate control to broader accessibility. Breakthroughs include o1-pro’s ability to reason, check complex research errors, and support PhD-level problem-solving, while new AI models like Gemini and ChatGPT integrate live video and voice interaction, and video generators like Veo 2 produce nearly cinematic quality, highlighting a future where AI becomes ever-present in daily life. (One Useful Thing; Dec. 19, 2024)

- On Capacity, Sustainability, and Attention Marc Watkins argues that faculty need to be given not only opportunities for professional development but the time to engage in professional development, when it comes to developing their AI skills and reevaluating their teaching and assessments with AI in mind. This is an excellent blog post that should be shared with administrators. (Rhetorica; Dec. 18, 2024)

- You Must See How Far AI Video Has Come Romero is mostly an AI cheerleader, so a reader should evaluate his assertions with a bit of skepticism. But when reviewing the examples he has provided of AI-generated videos, one walks away impressed with the technology. Take a look. (The Algorithmic Bridge; Dec. 18, 2024)

- To Use AI or Not Use AI? A Student’s Burden Daniel Cryer argues that the responsibility for upholding academic integrity has unfairly shifted to students, who are left to navigate vague AI policies. He suggests that this increased burden could be alleviated by providing AI-free spaces and empathizing with students’ struggles in adapting to this new educational landscape. (Inside Higher Ed; Dec. 9, 2024)

- 15 Times to Use AI, and 5 Not To In this end-of-year post, Mollick explores the practical applications and limitations of AI, suggesting it is most useful for tasks requiring quantity or generating ideas, but should be avoided in scenarios where deep learning, high accuracy, or human ethical judgment is essential. He emphasizes that using AI effectively requires a balance of skepticism, expertise, and awareness of its evolving capabilities and failure modes. (One Useful Thing; Dec. 9, 2024)

- Leon Furze’s Wrap Up of 2024: More from the Fence: Articles For and Against AI in Education Thank you Leon for organizing your posts/articles/research in such a readable and easy-to-access manner. For those of us on the fence, this is a great recap. (Leon Furze; December 6, 2024)

- Big AI Companies Need Higher Ed … but Does Higher Ed Need Them? Collin Bjork (Massey University, New Zealand) examines the growing dependency of universities on Silicon Valley AI companies and questions whether the benefits outweigh the financial, environmental, and ethical costs. He advocates for alternative AI solutions, which prioritize stewardship and local values over the extractive models of large ed-tech corporations. (Inside Higher Ed; Dec. 2, 2024)

- “A world divided into writes and write-nots is more dangerous than it sounds” This brief newsletter post from Harris Sockel references an October post by Paul Graham, warning us that in the Age of AI, we may be moving towards a world where some people know how to write while others do not. (Medium; AutomatED Dec. 2, 2024)

- AI Tools for 2025 OK, so this list curated by Jennifer Wales (a self-described AI Engineer at Accenture, Writer, and Tech Geek) is from September, but it makes an interesting end-of-year list. Wales’s recommendations are hers, of course. (Medium)

November 2024

- Generative AI at the End of 2024 Bryan Alexander explores recent advancements in generative AI, focusing on developments like AI agents, new tools for video and audio creation, and open-source innovations such as Mistral’s Pixtral. Highlighting the rapid pace of AI’s integration into various domains, Alexander also discusses challenges, such as accuracy and ethical concerns, while speculating on future applications like AI-driven browsers and interactive “Whisperverse” technologies. (AI, Academia, and the Future; Nov. 30, 2024)

- Getting Started with AI: Good Enough Prompting Ethan Mollick makes the point that effectively using AI requires practice and a “good enough” approach to prompting, rather than mastery of complex prompt engineering. By treating AI as an infinitely patient, forgetful coworker, users can experiment with task-specific and thinking prompts to better integrate AI into their workflows, ultimately developing intuition and confidence through hands-on experience. Yes, a fun look at AI prompting! (One Useful Thing; Nov. 24, 2024).

- AI ChatBot Can Conduct Research Interviews on Unprecedented Scale A new AI chatbot developed by London School of Economics researchers can conduct large-scale research interviews with conversational approaches, offering nuanced insights, greater participant engagement, and cost efficiency compared to traditional methods. Hmmmmmm. (Inside Higher Ed; Nov. 22, 2024)

- How to Reimagine Universities for the AI Era A deliberately provocative article by a Rutgers professor. Mark Schaefer critiques the current higher education model, arguing it is too slow, costly, and rigid to meet the demands of an AI-driven world. He proposes replacing degrees and static curricula with lifelong, subscription-based learning programs focused on adaptability, personalized AI teaching agents, and multidisciplinary cohorts to prepare students for a rapidly evolving future. (Mark Schaefer on Medium; Nov. 18, 2024)

- Is It Time to Regulate AI Use on Campus? Just wondering. The author highlights the growing need for comprehensive AI policies in higher education to address challenges like data security, ethical concerns, and inconsistent use across institutions. Examples from universities like UMass Amherst and Babson College showcase efforts to implement guidelines ensuring responsible AI use, with an emphasis on transparency, human accountability, and minimizing risks in high-impact areas. (Chronicle of Higher Ed; Nov. 11, 2024)

- Refusing AI in Writing Studies: A Quick Start Guide Jennifer Sano-Franchini, Megan McIntyre, and Maggie Fernandes argue for a nuanced approach to refusing generative AI (GenAI) in writing studies, emphasizing this refusal as a principled disciplinary stance rather than a reactionary rejection. They outline ten premises, including the ideological implications of technology, the importance of resisting linguistic homogenization, and the environmental and labor concerns tied to GenAI, framing refusal as a critical response to uphold the discipline’s values. Rather than banning GenAI outright, the authors advocate for cautious engagement, centering on ethical, pedagogical, and disciplinary goals while resisting the pressures of corporate-driven adoption. (Nov. 2024)

- Burn It Down: A License for AI Resistance In this article, Melanie Dusseau critiques the integration of generative AI into creative writing pedagogy, arguing that it undermines the ethical and imaginative practice of writing. She advocates for a subversive, resistance-oriented approach within academia, urging educators to reject AI’s intrusion into the arts and humanities, and instead focus on fostering human creativity and critical thinking in their students. (Inside Higher Ed; Nov. 12, 2024)

- The Near Future of GenAI in Education: Part Two This is a follow-up to Leon Furze’s blog post from back in September. In this post, Furze emphasizes the shift toward “offline AI” that can operate locally on devices, improving privacy and accessibility by bypassing cloud dependencies [Ed’s emphasis]. He also highlights the emergence of AI agents capable of performing autonomous tasks, and stresses the importance for educators to prepare for these disruptive technologies, recognizing the challenges and opportunities they bring to the field of education. (Leon Furze; Nov. 5, 2024)

- The Best Movies about AI From Disney’s The Sorcerer’s Apprentice (1940) to Forbidden Planet (1956), Blade Runner (1982; 2017) and more, these movies are cultural artifacts, providing “interesting things to say about AI.” Take a look at Bryan Alexander’s list! (AI, Academia, and the Future; Nov. 1, 2024)

- The Present Future: AI’s Impact Long before Superintelligence Ethan Mollick argues that while AI labs predict increasingly powerful models that could rival human expertise, today’s AI capabilities—such as multimodal processing, autonomous monitoring, and virtual assistants—are already impacting work and society in profound ways. He emphasizes that the decisions organizations make now about how to use AI, whether as a supportive tool or a monitoring mechanism, will set lasting precedents that shape the future of human agency in an AI-driven world. (One Useful Thing; Nov. 4, 2024)

- AI and the Myth of Personalized Learning Leon Furze critiques the concept of “personalized learning” as co-opted by tech companies, arguing that AI-driven education often prioritizes data collection and surveillance over true individualized learning. He contends that while AI may support certain learners, it fails to accommodate the diverse needs of students and risks reducing education to impersonal, algorithm-driven instruction rather than fostering genuine personal connection and human-centered learning. (Leon Furze; Nov. 1, 2024)

October 2024

- Claude Computer Use: The Next ChatGPT Moment Ed’s rec. In his article, Leon Furze reflects on his journey into the realm of digital texts and artificial intelligence, highlighting the evolution of language models from basic tools for content creation to more sophisticated systems like Anthropic’s Claude that can navigate and automate computer tasks. He emphasizes the urgent need for educators to understand these advancements and urges proactive engagement with Gen AI. (Leon Furze; Oct. 28, 2024)

- AI and Skill Loss: It’s Not that Simple Ed’s Rec. Leon Furze argues against the simplistic narrative that artificial intelligence in education will inevitably lead to skills loss, suggesting that this perspective undermines the broader role of teachers and overestimates the risks to student capabilities. Worth a read. (Leon Furze; Oct. 24, 2024)

- When You Give Claude a Mouse Ethan Mollick explores the capabilities and limitations of Anthropic’s new Claude agent, which demonstrates a degree of autonomy by executing complex tasks like creating lesson plans, playing strategy games, and navigating websites with minimal supervision. While the agent’s persistence and ability to strategize are promising, Mollick notes its current fragility. (One Useful Thing; Oct. 22, 2024)

- AI Detectors Falsely Accuse Students of Cheating—With Big Consequences The subtitle of this Bloomberg Businessweek article is: About two-thirds of teachers report regularly using tools for detecting AI-generated content. At that scale, even tiny error rates can add up. (Bloomberg Businessweek; Oct. 18, 2024)

- Follow the Quiet Voices to Find AI’s Truths In this article, Romero explores the polarized views around AI, likening it to a contentious political issue where people are pressured to take a side, especially as recent Nobel Prizes in Physics and Chemistry were awarded to AI researchers. He highlights the “synthetical thinkers” who resist simplistic binary views and prioritize nuanced, independent thinking, even if it means being misunderstood or overlooked amidst today’s divisive discourse. (The Algorithmic Bridge; Oct. 11, 2024)

- Off-Loading in the Age of Generative AI James DeVaney, the Associate Vice Provost for Academic Innovation at the Center for Academic Innovation at the University of Michigan, asks: “As we adopt these tools, let’s pause to ask: What are we gaining, and what might we be missing?” He argues: “It’s up to us to ensure that, even as we move faster, we don’t lose touch with the experiences that make our work, and our lives, meaningful.” (Inside Higher Ed; Oct. 10, 2024)

- AI-Generated Images Are Taking Over Google Image Results Behind a Medium paywall, but the title tells the story. Jim Clyde Monge discusses how Google Image results are increasingly dominated by AI-generated images, particularly as new AI tools like Midjourney and DALL-E make it easier for users to create photorealistic content. This influx of synthetic images is impacting users who rely on authentic photos, leading to frustration and concerns about misinformation, as well as prompting Google to introduce features like “About this image” to help users identify AI-generated content. (Generative AI: Oct. 9, 2024)

- 30 Things I’ve Learned about AI Yes, when Alberto Romero creates a list it bears inspection! Item 20 made me smile: AI has become like politics; people have chosen their sides, and no amount of new information will change their stance, as they’ve locked themselves into a tribal identity instead of staying open to updates. (The Algorithmic Bridge; Oct. 2, 2024)

September 2024

ChatGPT Strategies to Enhance Your Class Sessions: Ed’s Rec. A truly useful webinar by Dan Levy, Harvard Kennedy School. You will walk away with concrete ideas about how you might use generative AI for various teaching and planning tasks. Excellent. (Tues. Sept. 24, 2024)

Cultivating Self-Worth in the Age of AI: 5 Exercises to Ensure Students Know Their Value in an AI-Inundated Workforce A practical set of exercises to help students “understand AI better and understand what they can do better than AI. This combined understanding lends itself to maintaining dignity in a professional world inundated with AI.” (Harvard Business Publishing; Sept. 26, 2024)

- The Near Future of Generative Artificial Intelligence in Education: September 2024 Update Ed’s Rec. Leon Furze does an excellent job of evaluating the current ed tech landscape. Furze discusses recent developments in generative AI, highlighting the shift from broad, general models like ChatGPT to more specific applications such as Google’s Notebook LM, which targets particular use cases like organizing research documents. Despite these innovations, the author notes that the rapid pace of AI advancements often catches people off guard, with new capabilities in models like Claude and Gemini surprising users with their ability to handle vast amounts of text and complex tasks, suggesting that unexpected breakthroughs will continue. (Leon Furze; Sept. 30, 2024)

- A Near Future Vision of AI in Higher Education Ray Schroeder envisions a near-future in higher education where generative AI plays a transformative role, replacing mid-level administrative tasks and potentially even adjunct teaching positions with intelligent agents. He emphasizes the rapid advancements in AI, such as OpenAI’s o1 model, which excels in reasoning and complex problem-solving, suggesting that universities must adapt to these technologies to remain competitive and efficient. (Inside Higher Ed; Sept. 25, 2024)

- Higher Education and AI: A September 2024 Update Bryan Alexander explores the growing impact of generative AI on higher education, highlighting student usage trends, AI’s role in research, and the persistent issue of AI-facilitated cheating. He notes that while many universities are investing in AI integration, students feel unprepared for AI in the workforce, and there is a call for more faculty training and better data on how students actually use AI. (AI, Academia & the Future; Sept. 22, 2024)

- AI Can Now Make (Surprisingly Good) Podcasts from Your Writing Ed’s Rec. Yes, it is true. The recent update to Google’s NotebookLM, particularly its new Audio Overview feature, is generating excitement for its ability to create AI-hosted podcasts that summarize and discuss user-submitted content with impressive accuracy and flow. (The Algorithmic Bridge; Sept. 20, 2024)

- To Teach Students to Use AI, Teach Philosophy Adam Zweber argues that teaching philosophy equips students with the critical thinking skills necessary to effectively interact with AI, as it emphasizes asking the right questions, engaging in open-ended dialogue, and critically evaluating outputs, much like the Socratic method. (Inside Higher Ed; Sept. 18, 2024)

- The AI-Rich and the AI-Poor Ed’s rec. Alberto Romero responds to Reid Southen, who claims AI companies are demonstrating that they are “desperate” because they are hiking prices to offset losses. Romero sees this assessment as flawed. Instead, Romero argues, the price increases reflect AI companies’ forward-looking vision, developing more advanced reasoning AI that justifies higher costs, marking the end of the generative AI era and the beginning of a new, more expensive phase of AI development that will create a divide between those who can afford it and those who cannot. (The Algorithmic Bridge; Sept. 18, 2024)

- Expertise Not Included: One of the Biggest Problems with AI in Education Ed’s rec. Leon Furze argues that AI chatbots, such as AI tutors and Homework Helpers, are ineffective for education because they require users to have both expertise in the subject and the ability to use generative AI effectively. While recent AI advancements, like OpenAI’s o1 model, have shown progress, the author believes that more tailored, purpose-specific applications are needed to address the current limitations of chatbots in educational contexts. (Leon Furze; Sept. 18, 2024)

- More about GPT-o1: GPT-o1’s Arrival Is Pivotal Ed’s Rec. Graham Clay describes the release of OpenAI’s new o1 model as a paradigm shift in AI, significantly improving reasoning abilities and outperforming PhDs in complex tasks like physics and chemistry. He outlines the implications of o1 for educators, emphasizing that the model’s capacity to “pause to think” challenges previous assumptions about AI’s limitations and content-based immunity in education. (See Ethan Mollick’s article below as well.) (AutomatED; Sept. 16, 2024)

- Scaling: The State of Play in AI Ethan Mollick notes that AI capabilities are advancing rapidly, driven by both the increasing size of models and the introduction of a new scaling law focused on “thinking” after training. According to Mollick, AI will continue to grow in power, pushing the limits of what autonomous systems can achieve with minimal human intervention. (One Useful Thing; Sept. 16, 2024)

- When Access to AI Outpaces Attention Ed’s Rec. Marc Watkins argues that discussions about equity and access to AI are missing a crucial point: the rapid pace of AI deployment makes meaningful access nearly impossible as users cannot keep up with the overwhelming changes. He highlights the need for a slowdown and proper frameworks to process these developments, noting that without them, the current focus on equity is futile, and the real issue lies in how these rapid advancements are driven by the need to raise capital, rather than thoughtful adoption. (Rhetorica; Sept. 15, 2024)

- Something New: On OpenAI’s “Strawberry” and Reasoning Ethan Mollick discusses OpenAI’s new “Strawberry” enhanced reasoning system, which allows AI to “think through” complex problems, making it effective for tasks requiring planning and iteration, such as solving difficult physics problems and crossword puzzles. While the model represents a significant leap in problem-solving abilities, Mollick highlights its limitations, including occasional errors and hallucinations, and the evolving challenge of maintaining human oversight as AI becomes more autonomous. (One Useful Thing; Sept. 12, 2024)

- Exploring the TRAIL with Kevin Corcoran Lance Eaton discusses his involvement with TRAIL, a new AI-infused learning repository at the University of Central Florida, which aims to provide openly licensed, peer-reviewed strategies for higher education. Kevin Corcoran explains the repository’s development, emphasizing its potential to serve as a central resource for AI-related lesson plans and instructional strategies, with long-term goals of becoming as widely used as OER Commons. (AI + Education = Simplified; Sept. 11, 2024)

- AI Is Already Advancing Higher Education In this article, Ray Schroeder argues that generative AI is already enhancing higher education by improving student learning, faculty efficiency, and administrative operations, with tools like Grammarly, Khanmigo, and AI-powered research tools transforming daily practices. These AI tools also assist with personalized learning, predictive analytics for student success, and more efficient university operations, offering both students and staff greater resources and insights. (Sept. 10, 2024)

- Politicians Ramp Up the Pressure on AI In his post, Bryan Alexander highlights the growing involvement of political figures in AI regulation and usage across the globe. He discusses how the European Union, Australia, the United States, and even California are making strides to regulate AI technologies, including concerns over political deepfakes and economic risks. Alexander emphasizes that as political pressure on AI intensifies, firms must adapt to increased scrutiny. He concludes by suggesting that AI’s political, economic, and cultural impacts will continue to drive these regulatory trends. (AI, Academia, and the Future; Sept. 9, 2024)

- The Imperfect Tutor: Grading, Feedback and AI In her article, Patricia Taylor discusses the ethical and practical challenges of using AI for grading, noting that while some professors believe AI can handle elements like grammar and structure, she finds AI-generated feedback to be generic and potentially harmful to student development. Taylor advocates for a more nuanced approach, where AI might be helpful in specific contexts, but warns against over-reliance on it for meaningful assessment. (Inside Higher Ed; Sept. 6, 2024)

- Wake Up, Academia: The AI Revolution Waits for No One Ed’s Rec. Angela Virtu (Information Technologist at American U) argues that many higher education institutions are preparing students with outdated skills, while American University’s Kogod School of Business is leading the charge by ensuring every student graduates with a comprehensive understanding of AI. She emphasizes the importance of AI literacy for all students, faculty upskilling, and adapting curricula to keep pace with the rapid advancements in AI technology. (Inside Higher Ed; Sept. 6, 2024)

- 15 AI Ongoings Unfolding Right Now The full article is behind a Substack paywall, but Alberto Romero makes some interesting observations, in particular one about voice assistants. He posits that “soon everyone will be using [them].” Romero also points out that the AI hype is over. Now, we will settle into what he refers to as the “silent grinding phase . . . [where] people make sense of this tech we’ve built.” Good points. (The Algorithmic Bridge; Sept. 4, 2024)

- Students Are Using Gen AI to Prep for Cases. Don’t Worry (From Aug. 29, but moved up here). John Lafkas discusses how generative AI tools, like ChatGPT, can pose challenges to traditional case teaching by giving students easy access to case solutions. However, Lafkas emphasizes that AI can be used as a teaching tool to deepen discussions, enhance students’ analytical abilities, and shift the focus from finding the “right” answer to exploring diverse perspectives and critical thinking in case discussions. (Harvard Business Publishing)

August 2024

- Post-Apocalyptic Education Ethan Mollick discusses the “Homework Apocalypse,” where AI can now complete most traditional homework assignments, making them ineffective as learning tools. Despite the widespread adoption of AI by students (82% of undergraduates and 72% of K12 students in the U.S.), little has changed in educational practices. Mollick identifies two key illusions that hinder adaptation: the “Detection Illusion,” where teachers believe they can easily detect AI use, and “Illusory Knowledge,” where students think AI assistance helps them learn when it often undermines their understanding. Mollick argues that education needs to shift from traditional methods to incorporate AI more effectively, focusing on using AI as a tool to enhance thinking rather than replace it. (One Useful Thing: Aug. 30, 2024)

- The Generative AI Companion Movement Continues Bryan Alexander’s article explores the growing trend of generative AI companions—bots designed to act as human-like characters or companions. Unlike traditional chatbots, these AI companions, such as those offered by Character.ai, Replika, and others, present themselves as simulated human beings with names, personalities, and sometimes visual representations. Looking ahead, Alexander anticipates continued growth in the use of AI companions, alongside criticism and ethical debates. (Bryan’s Substack; Aug. 30, 2024)

**New: Excellent Exercise to Use with Students**: AI Images Are Already More Realistic Than You Think Furze discusses the creation of a web game called “Real or Fake?” where players identify AI-generated images from real ones, highlighting that while AI image realism has significantly improved, especially with models like Flux integrated into X (formerly Twitter), there are still subtle clues like shadows, symmetry, and reflections that can help spot fakes. The game underscores the challenges of detecting AI-generated content as technology advances, with only a few players achieving perfect scores by closely scrutinizing AI glitches. (Leon Furze; Aug. 24, 2024)

- **Great Resources** Bite-Size AI Content for Faculty and Staff Lance Eaton shares two new “5-tips” videos for faculty on using generative AI for course design and student engagement, along with a use case for creating effective FAQs from onboarding session transcripts using AI tools. (AI + Education = Simplified; Aug. 20, 2024)

- Struggling to Create AI Policies? Ask Your Students A professor at Florida International University had her students create their own AI usage policies, finding that they were stricter than she expected, which led to deeper discussions on ethics and academic integrity throughout the semester. This approach not only gave students a sense of ownership but also highlighted the need for universities to adopt more proactive and collaborative AI policies. (Inside Higher Ed: Aug. 22, 2024)

- Change Blindness WOW. Ethan Mollick takes his readers on a tour of how far AI has come in terms of image, video, and audio creation. Amazing. (One Useful Thing; Aug. 12, 2024)

- What Students Want: Key Results from Digital Education Council Global AI Student Survey 2024 A recent survey by the Digital Education Council revealed that 86% of students globally are using AI regularly in their studies, with ChatGPT as the most popular tool, but despite this widespread adoption, many students feel unprepared for an AI-driven workplace, prompting calls for universities to improve AI literacy, guidelines, and integration while addressing concerns about privacy, fairness, and over-reliance on AI in education. (DEC; Aug. 7, 2024)

- What Price Your ‘AI-Ready’ Graduates? Ed’s Rec. A provocative pushback against voices arguing that generative AI will be essential in the 21st-century workplace. Beetham’s concept of teaching students to be “AI Resilient” (See Item 8) is intriguing. (Aug. 7, 2024)

- Exploring the Impact of ChatGPT on Business School Education: Prospects, Boundaries, and Paradoxes The study concludes that while generative AI offers promising prospects for business education, careful consideration and strategic implementation are crucial to harness its potential benefits while minimizing its risks. The authors advocate for a balanced approach that integrates AI into education thoughtfully, fostering an environment that supports both innovation and equity. (Journal of Management Education; Aug. 2024)

- You Guys Have No Idea Just How Much People Hate Generative AI Alberto Romero acknowledges technology’s transformative impact on humanity and the growing discontent towards AI, particularly generative AI. While noting the potential benefits of technology, Romero critiques tech giants for prioritizing profit over societal good, leading to public mistrust and skepticism towards AI, which is exacerbated by its perceived lack of necessity and ethical concerns. (The Algorithmic Bridge; Aug. 2, 2024)

- Not So Fast on Teaching AI “Skills” John Warner discusses the educational value of generative AI, emphasizing that its use in education is about more than just acquiring skills; it’s about fostering “thoughtfulness, curiosity, and creativity.” Warner argues that educators should focus on how AI can help students develop their ideas and critical thinking rather than merely automating tasks. (Inside Higher Ed; Aug. 2, 2024)

- Does AI in the Classroom Facilitate Deep Learning in Students? Ed’s Rec. This article examines a study at William & Mary, which explored the impact of an AI teaching assistant, CodeTutor, on student learning in an entry-level computer programming course. Students using CodeTutor showed improved course scores, but many found it inadequate for tasks requiring critical thinking, prompting them to seek human assistance. The study highlights the importance of generative AI literacy, particularly in crafting quality prompts. (Phys.org; Aug. 1, 2024)

- The Reality of Copilot for Microsoft 365: 10 Use Cases That Reveal Its True Potential Even though this is an industry article selling a framework for adopting technology, its overview of the tasks that Copilot can do well, along with Copilot’s limitations, is useful.

July 2024

- Our Responsibility to Teach AI to Students Ray Schroeder, senior fellow for UPCEA: the Association for Leaders in Online and Professional Education, argues that there is an urgent need for educators to teach students how to use generative AI technology, as their future careers depend on these skills. AI proficiency is becoming a critical job requirement, similar to past shifts in office tools. (Inside Higher Ed; July 31, 2024)

- AI Is Complicating Plagiarism. How Should Scientists Respond? The widespread use of generative AI in academic writing has raised concerns about its potential to facilitate plagiarism and copyright infringement, as it can mimic existing work without attribution. Some argue that AI-generated text should be considered “unauthorized content generation” rather than plagiarism, while others emphasize the ethical and legal implications. Despite these challenges, many researchers acknowledge AI’s benefits in improving clarity and accessibility in scientific writing, leading to calls for clearer guidelines and full disclosure of AI use. (Nature; July 30, 2024)

- More Practical Strategies for GenAI in Education Leon Furze runs through ways in which instructors are using tools such as ChatGPT and Claude to do tasks such as designing curriculum; adapting content for learners; and developing engaging assignments/lessons. (Leon Furze; July 26, 2024).

- Majority of Grads Wish They’d Been Taught AI in College Ed’s Rec A survey by Cengage Group reveals that 70% of recent college graduates believe generative AI should be part of college curricula, with over half feeling unprepared for the workforce. Concerns about AI replacing jobs and the lack of AI skills among graduates highlight a growing skills gap. Employers also seek hires with foundational AI knowledge, emphasizing the need for universities to adapt their curriculums to include AI training.

- Research Insights #12: Copyrights and Academia Eaton’s blog post highlights ongoing concerns about generative AI and copyright issues, particularly following Taylor & Francis’s sale of academic research to Microsoft without author consent. This has sparked significant concern among academics about transparency, consent, and authors’ rights. Key voices like Lauren Barbeau express worries about AI’s potential misrepresentation of research, while Martin Eve emphasizes the importance of understanding contract terms, and Lance Eaton critiques the exploitation by big publishers. The discussion underscores the need to rethink copyright laws and academic publishing practices in the context of generative AI. (AI + Education = Simplified; July 23, 2024)

- Confronting Impossible Futures Ethan Mollick highlights the uncertainty surrounding the future of AI, emphasizing the need for organizations—including institutions of higher education—to plan for multiple scenarios, including the possibility of Artificial General Intelligence (AGI). Despite significant investment and varying beliefs about AI’s trajectory, many organizations fail to incorporate AI advancements into strategic planning. Mollick advocates for proactive engagement with AI’s current capabilities and future potential to better navigate and influence the evolving technological landscape. (One Useful Thing; July 22, 2024)

- Higher Education Grapples with Generative AI Bryan Alexander collects some current topics being discussed in higher ed. Here is a rundown:

- Some institutions, like Morehouse College, are introducing AI-powered teaching assistants and individual AI tutors that enhance social learning, as exemplified by York University’s use of AITutorPro.

- There are also notable concerns among students about the potential for AI to create unfair advantages and contribute to misinformation, which has led to some universities, particularly in China, implementing stringent anti-AI cheating measures.

- There is an ongoing discussion about the role of liberal education in preparing students for a future shaped by AI, emphasizing interdisciplinary thinking and critical awareness. (Bryan’s Substack; July 20, 2024)

- The Resistance to AI in Education Isn’t Really about Learning Ed’s Rec. A provocative challenge to instructor resistance to AI. Peter Shea argues that the resistance to AI in education is rooted in deeper issues of trust and control rather than learning. Educators fear losing professional autonomy and their traditional roles, leading to resistance against integrating AI despite its potential to enhance learning experiences. Shea highlights the need for educators to reassess their roles and embrace AI as a tool to improve feedback and learning outcomes, rather than viewing it as a threat to their profession. (Medium; July 19, 2024)

- Enhancing Learning through AI and Human Educators The author discusses the benefits of integrating AI with human educators, arguing that AI excels in personalization, accessibility, and scalability, while human educators provide emotional support, motivation, and creative instruction. The final recommendation is for a hybrid model leveraging both AI and human strengths. (eSchool News; July 15, 2024).

- Calling BS on the AI Education Future John Warner argues that embracing AI in education prioritizes productivity over the learning process, potentially undermining the fundamental aspects of teaching and learning. He critiques the mindset of venture capitalists who promote AI integration, suggesting it overlooks the importance of human interaction and the educational journey. (Inside Higher Ed; July 10, 2024)

- Animated AI TAs Come to Morehouse Morehouse College is introducing animated, AI-powered teaching assistants in five classrooms this fall. These AI avatars, trained from professors’ lectures and course materials, will provide students with 24/7 access to course-related information, enhancing the learning experience without replacing human faculty. (Inside Higher Ed; July 9, 2024)

- How Will the Rise of AI In the Workplace Impact Liberal Arts Education? Experts predict that skills like critical thinking and creativity will be more coveted as artificial intelligence replaces some technical jobs. As advanced AI tools become more prevalent in businesses, the demand for liberal arts majors is expected to rise due to their ability to address ethical implications and manage complex human interactions that AI cannot handle. College leaders need to adapt to these workforce changes, emphasizing the unique skills liberal arts education provides, such as ethical reasoning, creative problem-solving, and the ability to synthesize information. (Higher Ed Dive; July 8, 2024)

- Renowned Tech Analyst Urges Higher Ed Leadership in AI Tech Tech analyst and venture capitalist Mary Meeker pushes for universities to partner with businesses and adopt AI—and quickly. (Inside Higher Ed; July 8, 2024)

- The Myth of the AI First Draft Ed’s Rec. Everyone is a writer now. Leon Furze argues that the high value placed on writing in education can be detrimental, especially with the rise of generative AI, which is often used to create “AI first drafts.” This approach can undermine the development of genuine writing skills and critical thinking, as it bypasses the process of idea formation and personal expression that is essential to meaningful writing. (Leon Furze)

- When AI Triggers Our Impostor Syndrome Marc Watkins observes that many in academia struggle with imposter syndrome, feeling unqualified and as if they don’t belong. Embracing imposter syndrome can serve as a catalyst for empathy, curiosity, and humility in educational conversations. Modeling openness and courage is crucial in engaging students in discussions about emerging technologies like AI. (Rhetorica; July 5, 2024)

- How I Use ChatGPT as an Intuition Engine An interesting article about using ChatGPT, along with Google, to enhance the search experience. Romero says that ChatGPT’s strength lies in providing likely answers to imprecise queries, offering a broad range of responses that people can then use to craft a more precise query for Google. (The Algorithmic Bridge; July 5, 2024)

- Gradually, then Suddenly: Upon the Threshold Ed’s Rec. Mollick argues that small improvements have been leading to BIG changes (see images below; the prompts used in 2022 and 2023 are the same: “fashion photoshoot inspired by Van Gogh”). However, he also points out that technological change happens gradually and then suddenly, once certain thresholds of capability are passed. (One Useful Thing; July 4, 2024)

- What Does It Mean for Students to be AI-Ready? Ed’s Rec. Not everyone wants to be a computer scientist, a software engineer or a machine learning developer. We owe it to our students to prepare them with a full range of AI skills for the world they will graduate into, writes David Joyner. (Inside Higher Ed/Times Higher Ed; July 4, 2024) Image below from the article, showing people’s responses to AI:

- Why Writing Needs Good Friction Ed’s Rec. If you are interested in GenAI, higher education, writing, and pedagogy, take a look at this wonderful article by Leon Furze. He argues that in education, the push to reduce friction with tools like generative AI for writing can undermine the learning process, as struggling with initial drafts and overcoming challenges fosters deeper understanding and creativity. He provides links to articles/blogs by others, noting that his post is a “contribution to that much larger conversation about friction that seems to have progressed in the past few months around the implications of artificial intelligence and writing.” Furze provides his readers with a wonderful overview of what people who teach writing are thinking about GenAI. (Leon Furze; July 3, 2024)

- AI Reshapes Higher Ed and Society at Large by 2035 The writer argues that those of us in higher ed need to prepare “for the deep societywide changes that will take place in the next five to ten years.” As generative AI and autonomous agents become more prevalent, they will increasingly take on roles traditionally held by humans, prompting institutions to rethink their missions and approaches to education in a landscape where many jobs may be automated. (Inside Higher Ed; July 3, 2024)

- No One Knows How AI Works Alberto Romero says, “Don’t believe me? Ask ChatGPT.” As many researchers (and bloggers) have pointed out, neural networks, the driving force behind AI, remain enigmatic “black boxes” that defy human understanding despite their widespread use in various applications. (The Algorithmic Bridge; July 3, 2024)

- Anatomy of an AI Essay The writer covers some “tells” instructors can use to identify AI-generated essays. Editor’s note: But be careful—some of these are characteristic of undergraduate writing! (Inside Higher Ed; July 2, 2024)

- AI Economics and What All Might Mean for the Future Bryan Alexander’s article covers emerging trends in the area of AI + business. (Bryan’s Substack; July 1, 2024)

- How Higher Ed Can Adapt to the Challenges of AI (sign into our library database to access article) Ed’s Rec. Joseph E. Aoun’s article discusses the transformative impact of artificial intelligence (AI) on human experience and emphasizes the crucial role of higher education in preparing students for an AI-driven world. He argues that universities must go beyond merely teaching technical skills and should focus on fostering a comprehensive understanding of AI’s implications across various aspects of life, including the physical, cognitive, and social selves. Aoun proposes an updated educational framework, “Humanics 2.0,” which integrates foundational AI knowledge, experiential learning, and lifelong learning to help students navigate and thrive in a rapidly evolving digital landscape. (The Chronicle; July 1, 2024)

- Google Studied Gen Z. What They Found Is Alarming Ed’s Rec. Here is a pull quote: “Within a week of actual research, we just threw out the term information literacy,” says Yasmin Green, Jigsaw’s CEO. Gen Zers, it turns out, are “not on a linear journey to evaluate the veracity of anything.” Instead, they’re engaged in what the researchers call “information sensibility” — a “socially informed” practice that relies on “folk heuristics of credibility.” In other words, Gen Zers know the difference between rock-solid news and AI-generated memes. They just don’t care. (Business Insider; July 1, 2024)

June 2024

AI Plagiarism: Part I: Lance Eaton evaluated 3 recent scholarly articles about students and GenAI. Here are the 3 main findings of the 3 articles:

Wecks, J. O., Voshaar, J., Plate, B. J., & Zimmermann, J. (2024). Generative AI Usage and Academic Performance. arXiv preprint arXiv:2404.19699. Main Finding: The study finds that students using GenAI tools, such as ChatGPT, score on average 6.71 points lower (out of 100) than non-users. This is statistically significant and indicates a notable negative impact on academic performance. The negative effect is more pronounced among students with high learning potential, suggesting that GenAI usage might impede their learning progress.

Zhang, M., & Yang, X. (2024). Google or ChatGPT: Who is the Better Helper for University Students. arXiv preprint arXiv:2405.00341. Main Finding: The study compared the effectiveness of ChatGPT and Google in assisting university students with academic tasks. Preference for ChatGPT: 51.7% of students preferred using ChatGPT for academic help-seeking, while 48.3% preferred Google. This indicates a slight preference for ChatGPT among the students surveyed.

Luo, J. (2024). How does GenAI affect trust in teacher-student relationships? Insights from students’ assessment experiences. Teaching in Higher Education, 1-16. Main Findings:

- Erosion of Trust: The rise of GenAI has led to increased suspicion and a perceived erosion of trust between students and teachers. Students fear being wrongly accused of cheating due to AI-mediated work.

- Transparency Issues: There is a lack of “two-way transparency” where students must declare their AI use and submit chat records, but teachers’ grading processes remain opaque. This creates a power imbalance and reinforces top-down surveillance.

- Risk Aversion: To avoid accusations of cheating, some students avoid using AI tools entirely, even for permissible tasks like grammar checking, due to ambiguous guidelines on AI use.

- Personal Connection: The lack of personal connection between students and teachers exacerbates distrust. Large class sizes and limited interactions prevent the development of individualized trust.

AI Plagiarism: Part II: In a follow-up post, Eaton looks at how conversations with students might be handled. He begins, however, by noting: “I’m focusing this on traditional assignments. I’m also less and less and less a fan of these assignments.” Take a look at what Lance Eaton has to say about these conversations: When Students Use AI (June 20, 2024)

AI Plagiarism: Part III: Ed’s Rec. This is a great article about how to approach conversations with your students about GenAI. Lance Eaton goes into some depth about topics you might discuss. (June 26, 2024)

How to Use AI to Create Role-Play Scenarios for Your Students Ed’s Rec. A really useful article by HBS’s Ethan and Lilach Mollick. Role-play exercises offer a unique educational tool for students, allowing them to explore and practice skills in a low-risk environment, and GenAI makes the process of creating tailored role-playing opportunities easier. (Harvard Business School; June 2024)

How to Humanize AI Content (12 Easy Steps) Ed’s Rec. This would be a good piece to share with students. The main points made by Jalli (a blogger trying to monetize his advice about . . . blogging!) are true of any piece of writing, AI-generated or not. Helping students to identify where GenAI fails as a writer will help them understand what “makes” a good piece of writing. (Artturi Jalli; June 7, 2024)

Writing as “Passing” Ed’s Rec. A compelling piece by Helen Beetham noting that students have long felt excluded from academic discourse (pre-GenAI). Now, students must navigate a new landscape, where they can be accused of over-relying on GenAI. Beetham looks at ways instructors can encourage students to develop as writers. Well worth a look! (Imperfect Offerings; June 25, 2024)

A New Digital Divide: Student AI Use Surges, Leaving Faculty Behind Ed’s Rec. The title of the article says it all. (Inside Higher Ed; June 25, 2024)

- Culture and Generative AI: An Update. Ed’s Rec. This piece by Bryan Alexander gives a snapshot of where we (Note: people in the US, mainly) are in terms of public perception and use of GenAI. There are some very interesting findings and conjectures! For instance, the divide over GenAI use in various fields is likely leading to many professionals using it . . . but being afraid to admit to its use for fear of being publicly shamed. (Bryan’s Substack; June 26, 2024).

- Building Florida’s First AI Degree Program Miami Dade College just announced a new BS in applied AI. Listen to this Podcast, with Antonio Delgado, VP of Innovation and Technology Partnerships at Miami Dade. (Campus Technology; June 24, 2024)

- British Academics Despair as ChatGPT-Written Essays Swamp Grading Season Pull quote: ‘It’s not a machine for cheating; it’s a machine for producing crap,’ says one professor infuriated by the rise of bland essays. (Inside Higher Ed; June 21, 2024)

- AI Doesn’t Kill Jobs? Tell that to Freelancers On the one hand, freelancers are losing income due to GenAI, but the initial appeal of AI for companies is beginning to fade as CEOs realize the inferior quality of the content, making freelancers with strong writing/editing/revising skills valuable. (WSJ; June 21, 2024)

- Can GenAI Be Used to Support “Accidental” Asynchronous Learners in HyFlex Courses? An interesting article by HyFlex champion Brian Beatty. To learn more about HyFlex and GenAI, peruse the article. (Hyflex Learning Community; June 21, 2024)

- Forget Cheating. Here’s the Real Question about AI in Schools. What are some of the questions? How are we thinking about redesigning learning for an AI world? What does that mean for skills for students? What does that mean for skills for teachers? What does teaching and learning need to look like in the AI world? (Education Week; June 21, 2024)

- Racist, Robotic, and Random: More Thoughts on Generative AI Grading Leon Furze argues against using generative AI for grading, citing concerns over bias, lack of transparency, and the potential to widen economic divides in education. He acknowledges the appeal of AI’s efficiency and consistency but emphasizes that AI cannot replace the nuanced judgment of human educators and could lead to the deprofessionalization of teaching. (Leon Furze; June 20, 2024)

- Latent Expertise: Everyone Is in R&D Ethan Mollick makes a great point about the fact that to optimize the use of AI systems, experts need to share their knowledge to enhance the overall learning experience. Educators and ed tech experts have been engaged in various projects around the world. We will need to engage in this R&D—and share our findings—to make GenAI implementation meaningful. (One Useful Thing; June 20, 2024)

- California Bill Would Prevent AI Replacement of Community College Faculty Well, that’s good news! (Inside Higher Ed; June 20, 2024)

- New UNESCO Report Warns that GenAI Threatens Holocaust Memory Ed’s Rec. This is a chilling report, and of course, one may read it as applying to other historical events. According to UNESCO, generative AI poses a threat to the accurate memory of the Holocaust by potentially spreading disinformation and fabricated content. The report calls for the urgent implementation of ethical AI principles to prevent distortion of historical facts and ensure that future generations receive accurate education about the Holocaust. (UNESCO; June 18, 2024).

- Inside Barnard’s Pyramid Approach to AI Literacy Barnard College has introduced a pyramid approach to AI literacy, starting with basic understanding and gradually progressing to advanced AI applications, aiming to equip students and faculty with comprehensive AI skills while ensuring ethical considerations. (Inside Higher Ed; June 11, 2024) And here is another article from Educause about the AI Pyramid: A Framework for AI Literacy

- An AI Boost for Academic Advising Advances in AI mean that colleges can offer tailored support to students. The article discusses the ways in which AI-powered tools are helping academic advisors. (Inside Higher Education; June 18, 2024)

- AI Plagiarism: Part 1: Plagiarism Detectors (They’re Not Our Friends) Generative AI plagiarism checkers have significant flaws and can produce false positives, particularly for non-native English speakers. Lance Eaton argues that encouraging faculty to have open conversations with students about their work (instead of just relying on plagiarism checkers) helps in understanding their processes and insights. (AI + Education = Simplified; June 17, 2024)

- AI with Dr. Jason Wrench, SUNY New Paltz (…and others)! (June 17, 2024)

A great overview of GenAI for non-techies. (AI Search; June 16, 2024)

- How AI Can Catch Up with Pedagogy (Not the other way around!) The article responds to criticism that pedagogy needs to catch up with advances in GenAI. As Furze points out, a lot of the information that chatbots provide to educators about pedagogy is outdated. Furze highlights the limitations of AI in understanding complex classroom dynamics and the necessity for technology developers to engage with educators to create meaningful and effective AI tools for education. (Leon Furze; June 12, 2024)

- Doing Faculty Consultations Leon Furze reports on recent webinars he has been doing with college faculty. While Furze acknowledges concerns about generative AI, he calls for reframing the focus beyond ‘cheating’ aspects. (June 11, 2024)

- Chatbots STILL aren’t the future of AI in education… so what is? Leon Furze argues that chatbots are not the future of AI in education due to their limitations. He believes that more advanced, multimodal AI systems, integrating technologies like image recognition and voice capabilities, offer greater potential. (June 11, 2024)

- Generative AI as Simulation Runner Bryan Alexander’s article discusses how generative AI can be used to create realistic simulations for educational purposes. These simulations can model complex systems and scenarios, providing interactive and immersive learning experiences. (Bryan’s Substack; June 10, 2024)

- I’m a Computer Programmer and Wrote Professionally about ChatGPT for 1.5 Years: Here’s How Pro Writers Can Be Okay This article (June 9, 2024) by R. Paulo Delgado is behind a Medium paywall, so here are two pull quotes that sum up his main points:

- GenAI often provides inaccurate information and is lousy at math—always keep in mind that GenAI is not actually intelligent.

- ChatGPT has its uses for non-writers, just like Canva and CapCut have their uses for non-designers.

- How Students Are Actually Using Generative AI: And What Educators Can Do to Adapt (Inspiring Minds; Harvard Business; June 6, 2024)

- Doing Stuff with AI: Opinionated Mid-Year Edition Ethan Mollick walks his readers through what he has been doing with GenAI. He explores the evolving capabilities and playful uses of AI, emphasizing practical applications alongside fun, interactive experiences. In addition, Mollick highlights advancements in Large Language Models, their integration with tools for creating music, images, and code, and their potential for transforming educational and professional tasks. (One Useful Thing; June 6, 2024)

- Living in the Generative Age Bryan Alexander argues that we are entering a generative age where AI tools create content on the fly, shifting from the traditional publication model that has defined information dissemination from 1400 to 2022. This transition changes user habits from searching for content to directly asking questions and receiving instant responses, raising discussions about the implications and potential short lifespan of this generative era. (Bryan’s Substack; June 1, 2024)

- Information Age Vs Generation Age Technologies for Learning Although David Wiley shared this to his blog back in April, Bryan Alexander references Wiley’s post in his article (above), so I wanted to keep them together. According to Wiley, The shift from the Information Age to the Generation Age in technology-mediated learning involves moving from distributing perfect copies of existing resources to using generative AI to create new, dynamic content in response to specific queries, fundamentally altering pedagogical approaches and supporting infrastructure. (Improving Learning; April 29, 2024)

May 2024

ChatGPT-4o (The “O” is for “Omni”)

**Excellent Resource**: Rethinking Assessment for Generative AI Leon Furze advocates for placing an emphasis on moving away from high-stakes, written assessments such as essays and tests, which are susceptible to generative AI “cheating.” He brings UNESCO guidelines into his comprehensive guide. (Leon Furze; May 2024)

Trends Snapshot: The Emerging Role of Gen AI in Academic Research (The Chronicle)

Introducing ChatGPT Edu OpenAI’s page about “an affordable offering for universities to responsibly bring AI to campus.”

Policing AI Use by Counting “Telltale” Words Is Flawed and Damaging Lilian Schofield and Xue Zhou argue that paranoia over detecting AI-generated text in academia is flawed and damaging. This approach may unfairly target non-native English speakers and overlook the diversity of English usage. They advocate for teaching responsible AI use instead of stigmatizing familiar words and phrases as indicators of AI involvement, as this paranoia can stifle creativity and misjudge genuine academic efforts. (Times Higher Ed; May 3, 2024)

- New ChatGPT Version Aiming at Higher Ed OpenAI introduced ChatGPT Edu, a new AI toolset for higher education institutions focused on tutoring, writing grant applications, and résumé reviews, with privacy safeguards and input from universities like ASU, University of Pennsylvania, and Oxford. This new development is prompting both cautious optimism and worries. (Inside Higher Ed; May 31, 2024)

- Research Insights #9: Student-Focused Studies, Part 6 Lance Eaton regularly reviews the content of research articles and then summarizes their findings. In this post, he looks at three recent scholarly journal articles. His main findings are: 1) ChatGPT provides immediate answers, saving students time compared to traditional search engines like Google; and 2) ChatGPT aids in overcoming ‘brain freeze’ by providing inspiration for starting assignments. However, there are a number of challenges including the often noted point that institutions may need to reconsider assessment methods to counteract academic misconduct facilitated by ChatGPT. (AI + Education = Simplified; May 29, 2024)

- Don’t Use GenAI to Grade Student Work In this post, Leon Furze argues against using Generative AI (GenAI) for grading student work, highlighting issues of inconsistency, bias, and lack of true understanding. He contends that while AI can provide superficial improvements, it cannot replace the nuanced, empathetic judgment of human educators, and its use may exacerbate existing inequities in education. (Leon Furze; May 27, 2024)

- The Great AI Challenge: We Test Five Top Bots on Useful, Everyday Skills In a detailed comparison of five top AI chatbots—OpenAI’s ChatGPT, Microsoft’s Copilot, Google’s Gemini, Perplexity, and Anthropic’s Claude—each was evaluated for their performance in various real-life tasks. The results varied across categories, with Perplexity emerging as the overall winner for its concise and accurate responses, while ChatGPT excelled in health advice and cooking but lagged behind in creative writing. Despite rapid advancements and upgrades, no single chatbot consistently outperformed the others in all areas, showcasing their unique strengths and weaknesses. (WSJ; May 25, 2024)

- A Provocation for Generating AI Alternatives Helen Beetham discusses alternatives to the current course of AI development, emphasizing human-centric and democratic approaches. She shares insights from a workshop on generating AI alternatives, focusing on collaborative and creative methods to reframe AI’s role in society. What follows is a brief video, in which Beetham highlights concerns about AI models memorizing copyrighted materials, which impacts cultural assessments and echoes dystopian warnings about the loss of originality and freedom.

- Most Researchers Use AI-Powered Tools Despite Distrust Despite widespread distrust of AI companies, over 75% of researchers use AI tools in their work, primarily for tasks like discovering, editing, and summarizing research. Ed’s note: Perhaps an alternate approach, as Suzanne Massie once taught Ronald Reagan: doveryai, no proveryai (доверяй, но проверяй), “Trust but Verify.” (Inside Higher Ed; May 24, 2024)

- Treat AI News Like a River, Not a Bucket Medium writer Alberto Romero notes that it is challenging to sift through all the AI information that is being churned out by bloggers (like himself), news outlets, industry PR feed, and scholars. The solution? Adopting a mindset of treating the ‘to read’ pile like a river instead of a bucket and plucking choice items from the ‘river’ rather than feeling compelled to empty an unmanageable ‘bucket’. Point noted. (Medium; May 24, 2024)

- How Two Professors Harnessed GenAI to Teach Students to Be Better Writers Two professors at Carnegie Mellon University developed a tool called myScribe, leveraging “restrained generative AI” to help students improve their writing by converting notes into prose, thus reducing the cognitive load of sentence generation and allowing students to focus more on their ideas and overall structure. This approach aims to prevent misuse and “hallucinations” of AI by restricting the AI’s output to the student’s own notes, enhancing the writing process without compromising the quality of thought or expression. (FASTCompany; May 23, 2024)

- How Generative AI Tools Assist with Lesson Planning Pull quote from a K-12 instructor: “My colleagues and I utilize MagicSchool’s suggested 80/20 approach of using artificial intelligence to help with designing a lesson. AI does the bulk of the initial work, which we review for bias and accuracy. Then we step in and take care of the rest, which amounts to about 20 percent of the task.” This article’s main focus is on MagicSchool.ai. (Edutopia; May 22, 2024)

- Are AI Tutors the Answer to Lingering Learning Loss? As some K-12 schools embrace chatbot tutors, others are still weighing the pros and cons. AI tutoring tools, such as Khan Academy’s Khanmigo powered by OpenAI’s GPT-4, offer significant advantages over human tutors, including lower costs, which support equitable access to education. As these tools continue to improve, they may present a promising solution for addressing lingering learning loss. (Ed Tech: Focus on K-12; May 22, 2024)

- Colleges Bootstrap Their Way to AI Literacy Goldie Blumenstyk discusses how colleges are creatively building their AI expertise without needing large budgets or extensive computer-science faculties. She cites five different institutions: Metropolitan State University of Denver, Randolph College, Hudson County Community College, Marshall University, and Camden County College. (The Edge: Chronicle of Higher Ed; May 22, 2024)

- The AI-Augmented Nonteaching Academic in Higher Ed The article looks at the increasing role of AI in non-teaching aspects of higher education, such as administration, research, and student support services. It highlights the potential benefits, like efficiency and cost savings, but also raises concerns about job displacement and the need for educators to adapt to these changes. Overall, it emphasizes the importance of leveraging AI responsibly to enhance rather than replace human involvement in academia. (Inside Higher Ed; May 22, 2024)

- Empowering Student Learning: Navigating AI in the College Classroom Through student surveys, it was found that students prefer to use AI to improve their work rather than replace their own efforts. The article suggests strategies for educators to guide students in responsibly using AI, balancing AI use with critical thinking, and fostering collaboration. (Faculty Focus; May 22, 2024)

- AI Grading Is Already as ‘Good as an Overburdened’ Teacher, but Researchers Say It Needs More Work The article discusses early research indicating that AI systems like ChatGPT are nearly as effective as overburdened teachers at grading essays but warns that these AI tools need further improvement before being used for high-stakes grading. (The Hechinger Report; May 20, 2024)

- AI’s New Conversation Skills Eyed for Education The new version of ChatGPT, GPT-4o, features enhanced human-like verbal communication. This upgrade allows for real-time conversations, emotion simulation, and improved language translation, making it a potential tool for personalized learning and tutoring. Educators see opportunities for tailored instruction, mock interviews, and deeper student engagement. (Inside Higher Ed; May 17, 2024)

- GPTs for Scholars: Enablers of Shoddy Research? Despite resolving issues of fake citations, GPTs could enable shoddy research by allowing researchers to cite without proper engagement with the literature. The authors emphasize the need for further assessment, guidelines, and training to ensure the responsible use of these AI tools. (Inside Higher Ed; May 16, 2024)

- Students Pitted against ChatGPT to Improve Writing New online courses at the University of Nevada, Reno, are designed to educate future educators about the limitations and potential of artificial intelligence (AI) through competitive writing tasks. Students in two courses are required to compete against ChatGPT in writing assignments, emphasizing the creative and intellectual strengths humans bring over AI tools. (Inside Higher Ed; May 15, 2024)

- AI Has Changed Learning, Why Aren’t We Regulating It? According to Marc Watkins’s sobering analysis, educators need to be prepared for the changes AI tools like GPT-4o bring to the learning process. Existing regulations, such as FERPA, COPPA, IDEA, CIPA, and Section 504, must be considered for AI tools in education. (Rhetorics; May 14, 2024)

- OpenAI GPT-4o: The New Best AI Model in the World. Like in the Movies. For Free. Behind a Medium paywall. Romero notes that “GPT-4o stands out as a multimodal end-to-end model, enabling processing of text, audio, voice, video, and images simultaneously, showcasing capabilities comparable to those seen in movies.” (Alberto Romero; May 13, 2024)

- GenAI Strategy: Attack Your Assessment Ed’s Rec Leon Furze, as usual, is on target, this time with this call to revisit faculty approaches to assessment. The central theme is the necessity for educators, regardless of their field, to critically evaluate and, if necessary, revise their assessment strategies. This involves using GenAI tools to simulate student responses to current assessment tasks, thereby revealing vulnerabilities. The author provides practical steps for educators to engage with AI technology during faculty meetings and use it to test the robustness of their assessment tasks. (Leon Furze; May 13, 2024)

- Experts Predict Major AI Impacts in New Report The Educause report, which includes the opinions of higher ed and tech experts, highlights the potential transformation AI can bring to teaching, learning, student support, and institutional management. It emphasizes the importance of preparing educators and institutions for this AI-driven shift in education to maximize its benefits. (Inside Higher Ed; May 13, 2024)

- The Good, the Bad, & the Unknown of AI In a keynote at UMass, Lance Eaton focused on both the challenges and successes of generative AI. Two important points: AI can assist in navigating tedious tasks and projects, minimizing energy drain, and AI’s fallibility can heighten awareness and scrutiny of the information received. (AI + Education = Simplified; May 13, 2024)

- Harnessing the Power of Generative AI: A Call to Action for Educators Ripsimé K. Bledsoe (Texas A&M) argues that if educators steer the integration of AI with intention and purpose, it can reach its potential as an immense and versatile student success tool. She offers six areas on which to focus efforts. (Inside Higher Ed; May 10, 2024)

- No One Is Talking about AI’s Impact on Reading AI assistants can now summarize and query PDFs (this editor uses them), highlighting the potential impact on reading skills. While AI assistants can help many readers, including neurodiverse and second-language learners, these tools may wind up hindering students’ ability to critically engage with texts and form nuanced conclusions. Watkins suggests that active reading assignments, social annotation tools, and group discussions can encourage students to focus on the act of reading and not rely solely on AI assistants. (Rhetorics; May 3, 2024)

- Ditch the Detectors: Six Ways to Rethink Assessment for Generative Artificial Intelligence Ed’s Rec Leon Furze again writes a prescient article, this time about assessment. (Leon Furze; May 3, 2024)

- GenAI Strategy for Faculty Leaders Ed’s Rec. Leon Furze offers a blueprint for developing AI guidelines. A great resource. (Leon Furze; May 1, 2024)

- New AI Guidelines Aim to Help Research Libraries The Association of Research Libraries announced a set of seven guiding principles for university librarians to follow in light of rising generative AI use. (Inside Higher Ed; May 1, 2024)

April 2024

AI Detection in Education Is a Dead End Researcher-PhD candidate Leon Furze’s blog post is a well-written exploration—and explanation—about AI detection software and its impact on students. For those who don’t want to wade through the study below, this is a friendly interpretation of some of the findings. (April 9, 2024)

Designing and Assessing Generative AI Student Projects This Adobe for Education discussion includes three faculty members who teach writing, one of them, Shelley Rodrigo, the president of the National Council of Teachers of English (NCTE). Great discussion:

**New Study** GenAI Detection Tools, Adversarial Techniques and Implications of Inclusivity in Higher Education From the article’s abstract: The results demonstrate that the detectors’ already low accuracy rates (39.5%) show major reductions in accuracy (17.4%) when faced with manipulated content, with some techniques proving more effective than others in evading detection. The accuracy limitations and the potential for false accusations demonstrate that these tools cannot currently be recommended for determining whether violations of academic integrity have occurred, underscoring the challenges educators face in maintaining inclusive and fair assessment practices. However, they may have a role in supporting student learning and maintaining academic integrity when used in a non-punitive manner.

The AI-Writing Paradox Ed’s Rec. Blogger/Educator Debra Lawal’s article discusses recent cases where well-regarded professional writers have admitted to using GenAI for various tasks and asks her readers to consider not only the usefulness of AI tools such as Grammarly, but also ways in which we can develop a distinct voice in the age of AI. This blog post would be a great one to assign to students. (Debra Lawal; April 12, 2024)

- Responsible AI and the ‘Future of Skills’ A gathering hosted by ETS delved (!) into how AI could change how students are tested and how employers assess skills. (Inside Higher Ed; April 26, 2024)

- Good Ideas: When to Use AI for Brainstorming Another great blog post by Leon Furze, making the point that humans may be better off trying to brainstorm without AI first. Great read. (Leon Furze; April 25, 2024)

- Making Meaning with Multi-Modal GenAI Leon Furze has become an important voice when it comes to reflecting upon genAI. Take a look at this overview of multi-modal communication. (Leon Furze; April 23, 2024)

- Students Putting AI to Work A brief article from Gonzaga University, illustrating gen AI applications in the classroom and student attitudes to the technology. (April 23, 2024)

- Another Workshop for Faculty and Staff Lance Eaton reflects on a recent faculty workshop and provides access to his slide deck and other material. (AI + Education= Simplified; Lance Eaton; April 23, 2024)

- Innovation Through Prompting: Democratizing Educational Technology In this post, Ethan Mollick examines how gen AI enables non-techies to leverage tech tools in interesting ways. (One Useful Thing; April 22, 2024)

- AI and the End of the Human Writer The article explores the impact of AI on writing, noting how language models like ChatGPT can produce diverse literary forms without experiencing the existential doubts that plague human writers. Will true human writing, with its quirks and authenticity, survive? The author is not so sure. (The New Republic; April 22, 2024)

- It’s the End of the Web as We Know It (The Atlantic; April 22, 2024)

- On Building an AI Policy for Teaching and Learning (with a link to the actual document here); Lance Eaton, working with students and faculty from College Unbound, created well-thought-out guidelines for both student and faculty use of AI. (AI + Education= Simplified; Lance Eaton; April 17, 2024)

- Generative AI Doesn’t ‘Democratize Creativity’ Ed’s Rec Leon Furze’s answer to Ethan Mollick. Great! (Leon Furze; April 16, 2024)

- AI and Higher Education: Scenes from ASU-GSV (Arizona State-Global Silicon Valley; yes, it’s a thing, a big thing.) This year, gen AI was at the center of the discussion. (Bryan’s Substack; April 16, 2024)

- Annual Provosts’ Survey Shows Need for AI Policies, Worries Over Campus Speech The title says it all. (Inside Higher Ed; April 16, 2024)

- 9 Point Action Plan: For Generative AI Integration into Education Brent Anders blogs about his new book. (SOVOREL; April 14, 2024)

- What Just Happened, What Is Happening Next Ed’s Rec. Ethan Mollick gives his readers an update on the tasks that generative AI is now able to do well. According to Mollick, the capabilities of LLMs are doubling every 5-14 months, which is impressive. As usual with Mollick’s posts, this is well worth the read. (One Useful Thing; April 9, 2024)

- Research Insights #7: Open Educational Resources & Generative AI Part I Ed’s Rec. This is Lance Eaton’s latest commentary on scholarly articles about generative AI. This research looked at AI + OER within the context of UNESCO’s recommendations about OER. Eaton notes that insights from the study can guide educational developers in creating strategies that leverage AI to increase student engagement and participation. (AI + Education = Simplified; April 9, 2024)

- More Teachers Are Using AI Detection Tools: Here’s Why That May Be a Problem The article’s title indicates the topic. (Education Week; April 5, 2024)

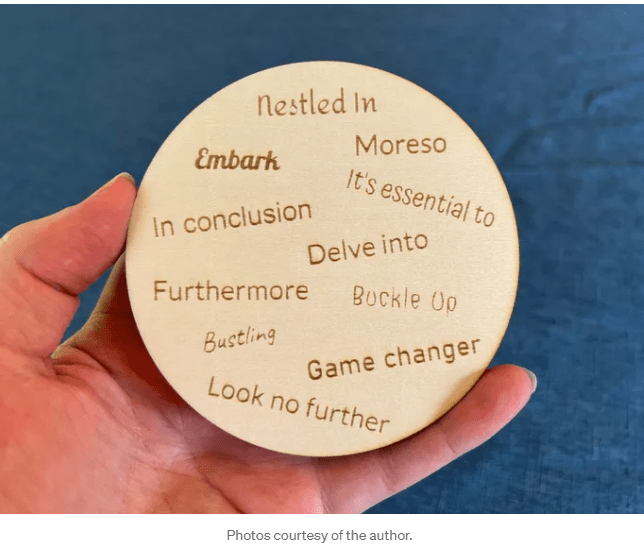

- I Turned ChatGPT’s Horrible Writing Cliches into a Handmade Coaster Behind a paywall, but you don’t really need to read it. The lovely coaster is pictured above. (The Generator; April 2, 2024)

March 2024

Report: The Advantages that AI Brings to Higher Ed (Link to report included) A report highlights AI’s potential to enhance higher education through student support and data analysis, emphasizing the importance of equitable access and culturally aware design to prevent a new digital divide and ensure HBCUs and MSIs benefit without falling behind. (Diverse Issues in Higher Education; March 13, 2024)

- Delving into “Delve” One of genAI’s most overused verbs is “delve.” This blog post shows the exponential growth of its use in academic abstracts! (Philip Shapira; March 31, 2024)

- AI-Generated Garbage Is Polluting Our Culture Peer reviewers are using gen AI to write their reviews? Apparently. This is just one cultural shift that Erik Hoel derides in this critique of gen AI. (NYTimes; March 31, 2024)

- On the Necessity of a Sin: Why Treating AI Like a Person Is the Future Ed’s Rec. Well worth the read. Ethan Mollick looks at AI anthropomorphization. (One Useful Thing; March 30, 2024)